I've conducted research on various aspects of image quality (IQ) and video quality (VQ) in medical imaging applications. I've grouped my work into the following categories; read on or jump to a topic:

Acknowledgements: Projects, Collaborations, Organizations

Video Quality Algorithms

IQ/VQ algorithms estimate the quality of an image/video by attempting to mimic or predict human judgment/performance. They can be used to optimize image acquisition, image processing, and visualization parameters without having to conduct time-consuming experiments with human observers - as part of in-silico clinical trials.

I have developed an algorithm for a medical application and contributed to a general video quality metric:

-

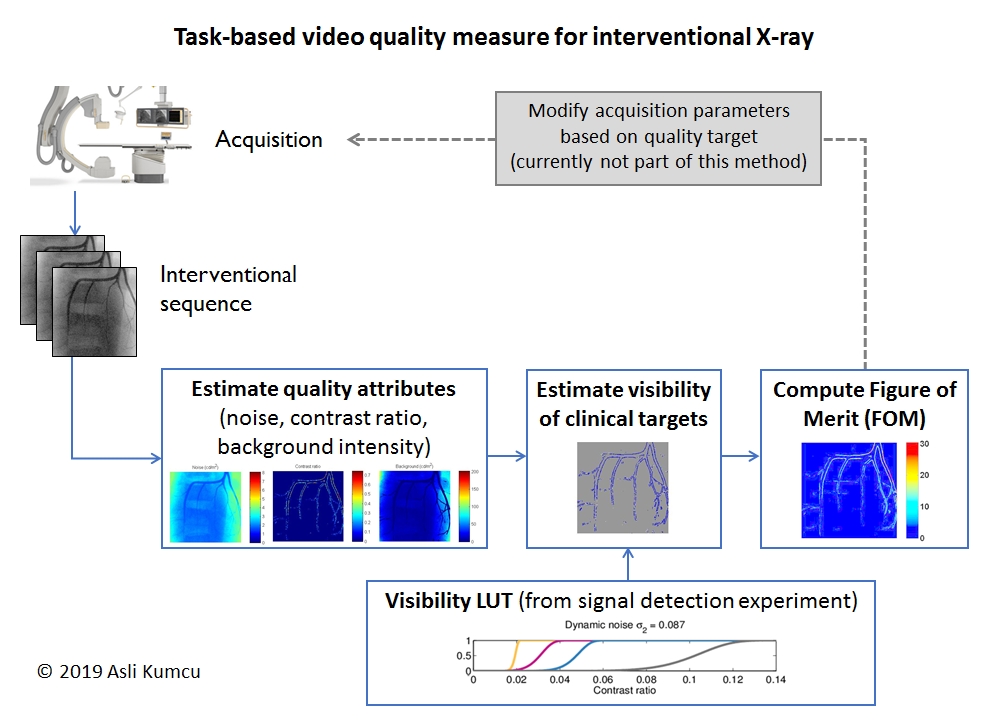

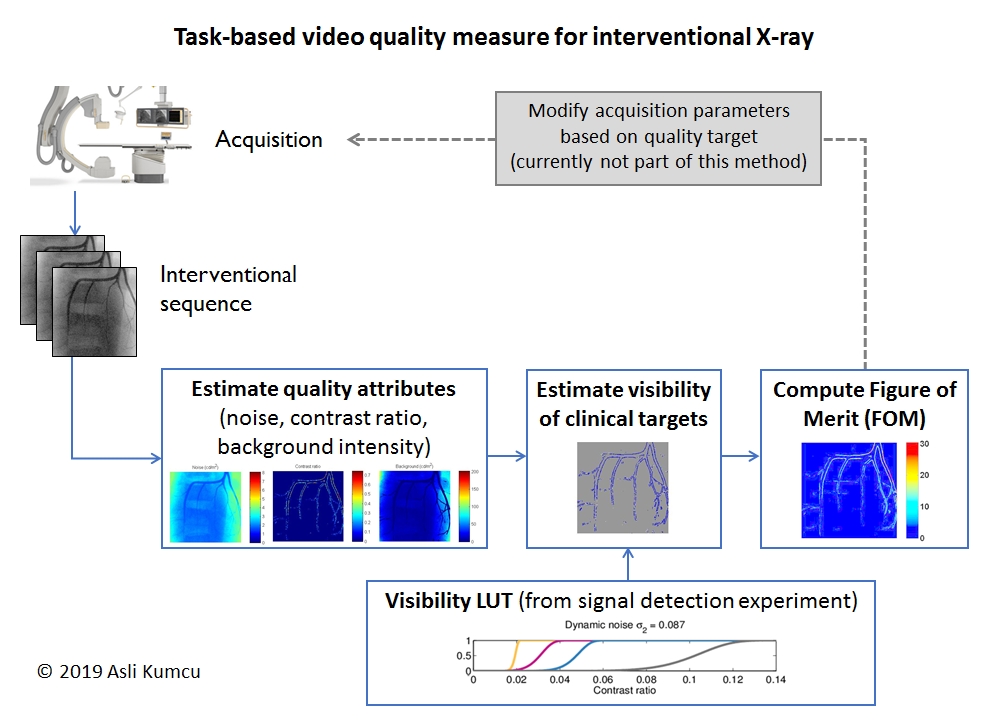

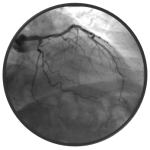

A task-based medical quality measure for interventional X-ray (conference paper (in print),

conference presentation and demo). This algorithm identifies blood vessels in cardiac and neurological fluoroscopy/angiography sequences and predicts the video quality level. It incorporates a psychovisual model to predict the visibility of clinical targets (conference paper). The model was developed from a set of studies measuring the psychometric function for contrast detection as a function of noise type (static, dynamic), noise level, and background intensity. Our results show high correlation with dose levels.

- A. Kumcu, L. Platiša, B. Goossens, A. J. Gislason-Lee, A. G. Davies, G. Schouten, D. Buytaert, K. Bacher, W. Philips, "A visibility overshoot index for interventional X-ray image quality assessment", in Medical Imaging 2023: Image Perception, Observer Performance, and Technology Assessment, C. R. Mello-Thoms, Y. Chen, Eds., SPIE, pp. 124670T-1–12, 2023.

- A. Kumcu, L. Platiša, B. Goossens, A. J. Gislason-Lee, A. G. Davies, G. G. Schouten, D. Buytaert, K. Bacher, W. Philips, "Interventional X-ray quality assessment using a visibility overshoot index", in Abstracts, Medical Image Perception Conference XIX, York, UK, July 17-20, pp. 1, 2022.

- A. Kumcu, B. Ortiz-Jaramillo, L. Platiša, B. Goossens, W. Philips, "Interventional X-ray quality measure based on a psychovisual detectability model", in Medical Image Perception Conference XVI, Ghent, Belgium, June 3-5, pp. 1, 2015

- A. Kumcu, L. Platiša and W. Philips, "Effects of static and dynamic image noise and background luminance on letter contrast threshold", in 7th International Workshop on Quality of Multimedia Experience (QoMEX), pp. 1–2, 2015


- Contribution to a full-reference video quality measure (co-author on conference paper). Algorithm developed by Benhur Ortiz-Jaramillo.
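To make the term "psychometric function for contrast detection" used above more concrete, here is a minimal sketch of one commonly used form, the Weibull function. It is illustrative only and is not the psychovisual model from the papers listed above: the function name, the slope value, and the example thresholds are assumptions chosen for the example.

```python
import math


def weibull_detection_probability(contrast, threshold, slope=3.5,
                                  guess_rate=0.0, lapse_rate=0.0):
    """Probability of detecting a target at a given contrast.

    Weibull psychometric function (an assumed, generic form):
        p = guess + (1 - guess - lapse) * (1 - exp(-(c / threshold) ** slope))
    With guess_rate = lapse_rate = 0, p is about 0.63 when contrast == threshold.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if contrast <= 0:
        return guess_rate
    core = 1.0 - math.exp(-((contrast / threshold) ** slope))
    return guess_rate + (1.0 - guess_rate - lapse_rate) * core


if __name__ == "__main__":
    # Hypothetical thresholds for two viewing conditions (not measured data).
    for label, threshold in [("condition A", 0.02), ("condition B", 0.04)]:
        p = weibull_detection_probability(0.03, threshold)
        print(f"{label}: P(detect contrast 0.03) = {p:.2f}")
```

In a study of this kind, one such curve would typically be fitted per condition (for example, per noise type, noise level, and background intensity), and the fitted thresholds compared across conditions.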

Assessment of Task Performance and User Behavior

Almost all human studies will include a measure of efficacy (or performance), such as the time to complete the task, whether the task was completed, or whether the abnormality was detected.

Devices that generate video sequences, however, may require interaction between the doctor and the device, such as in laparoscopic surgery. Performance measures alone may not entirely capture how interactivity is affected by different imaging parameters.

Therefore, we can measure two other aspects of device usability in addition to efficacy (e.g. accuracy, detection, diagnosis): efficiency (time, navigation behavior, perceived stress) and satisfaction (subjective preferences).

I have conducted two studies to assess the link between the different aspects of the usability of medical devices:

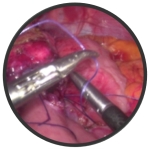

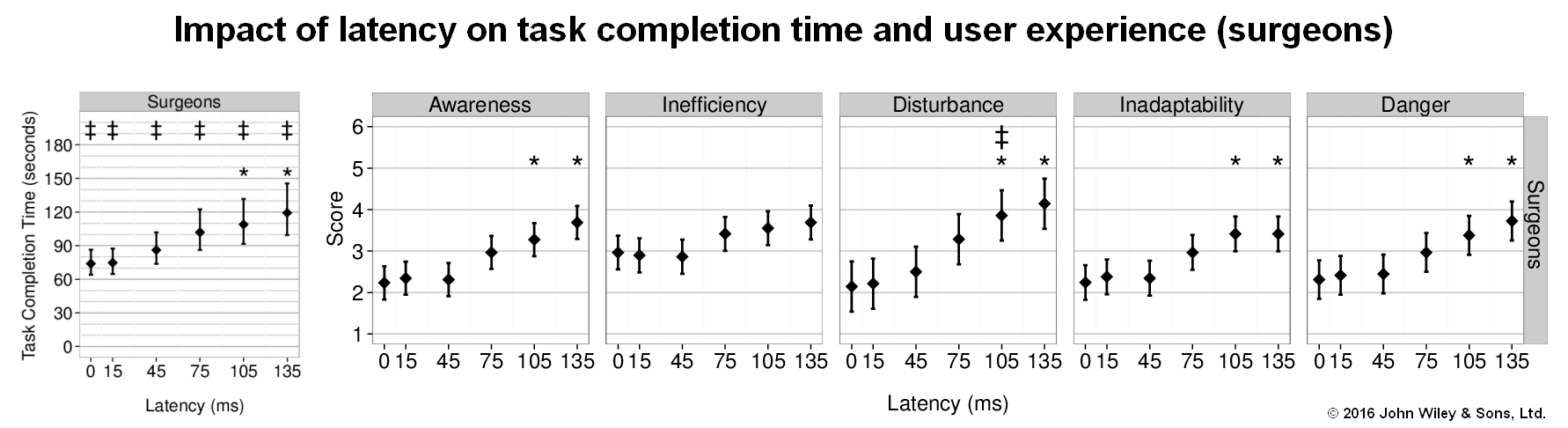

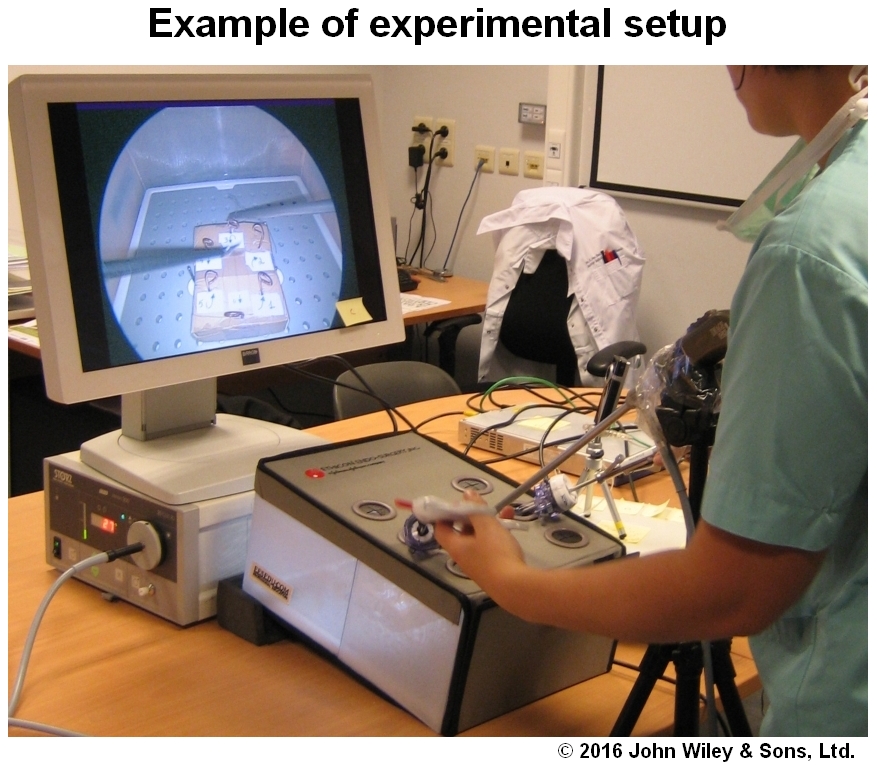

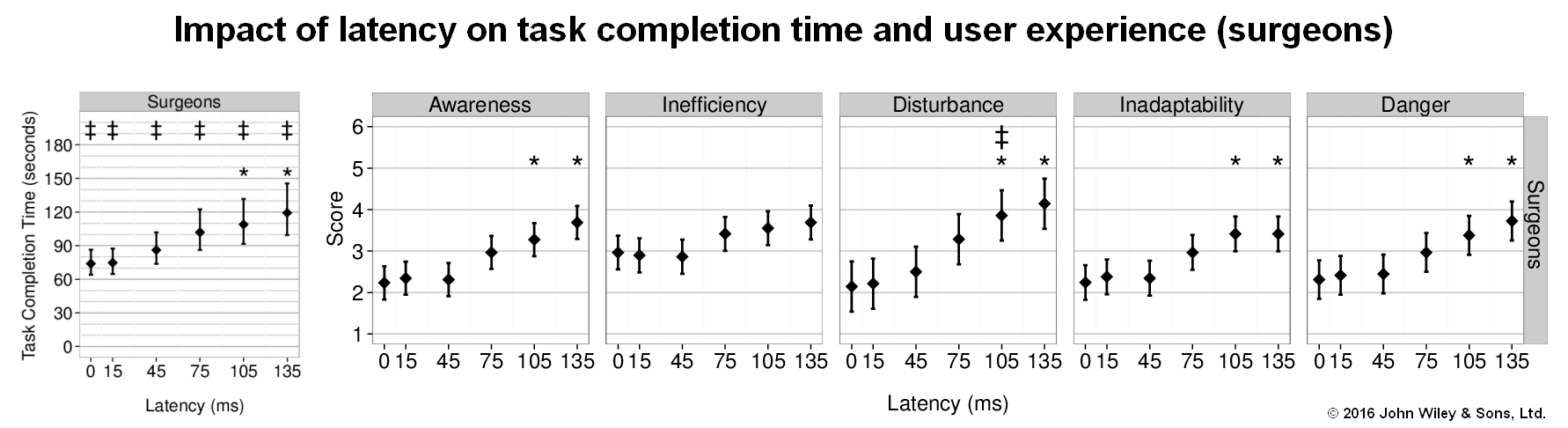

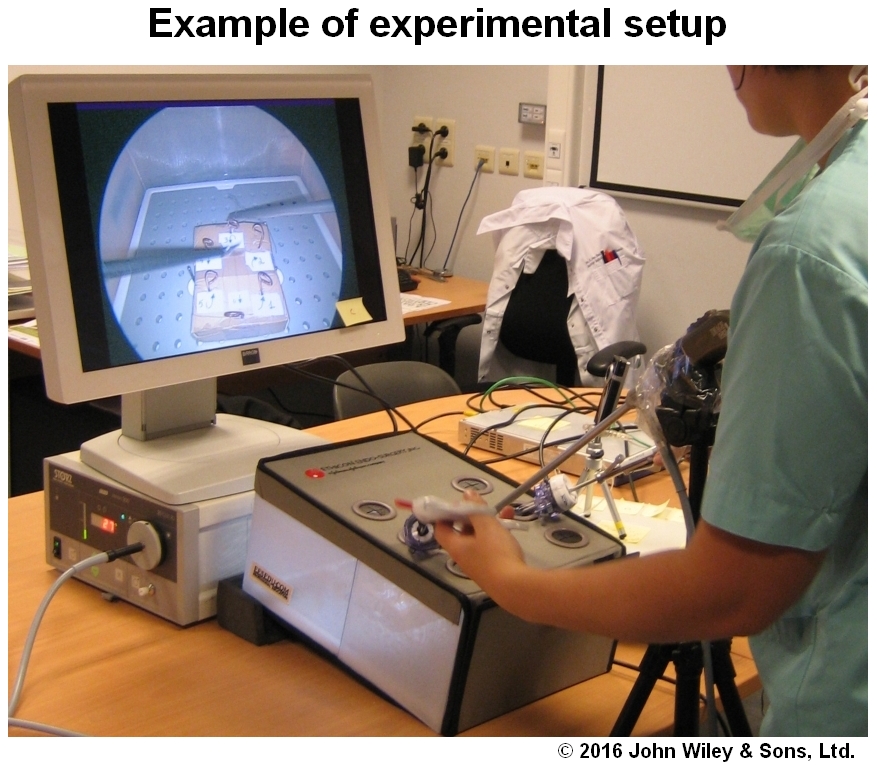

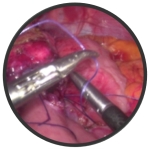

- Effect of video lag on laparoscopic surgery: correlation between performance and usability at low latencies (journal paper).

We evaluated the effect of video lag introduced by networking devices. A delay between the surgeon’s action and visualization of the action on a monitor may induce fatigue and compromise patient safety. We determined that even small amounts of video delay (105 milliseconds) increased the duration of the task (left plot) and induced a negative user experience (five plots on the right). Experienced surgeons were impacted more strongly than trainees.

- A. Kumcu, L. Vermeulen, S. A. Elprama, P. Duysburgh, L. Platiša, Y. Van Nieuwenhove, N. Van De Winkel, A. Jacobs, J. Van Looy, W. Philips, "Effect of video lag on laparoscopic surgery: correlation between performance and usability at low latencies", The International Journal of Medical Robotics and Computer Assisted Surgery, vol. 13, no. 2, pp. 1–10, 2017


- Browsing behavior during scoring of simulated medical image sequences (conference paper, [preprint pdf]). We measured browsing behavior as readers scored simulated single-slice and multi-slice images with simulated lesions. Reading time, the number of forward-backward repetitions through the stack, and the average number of slices per repetition were highest for False Negative cases, followed in decreasing order by False Positive, True Negative, and True Positive cases. Our results tend to concur with eye-tracking studies which report that readers fixate longer on regions subsequently scored incorrectly.

A. Kumcu, L. Platiša, M. Platiša, E. Vansteenkiste, K. Deblaere, A. Badano, W. Philips, "Reader behavior in a detection task using single- and multislice image datasets", in SPIE Medical Imaging 2012: Image Perception, Observer Performance, and Technology Assessment, pp. 831803-831803-13, 2012
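The browsing measures described above can be derived from a log of which slice was on screen over time. The sketch below shows one possible way to do this; it is an assumed operationalization, not the definition used in the paper. Here a "pass" is a run of scrolling in one direction, a "reversal" is a change of direction, and `browsing_metrics` and its output keys are hypothetical names.

```python
def browsing_metrics(slice_trace):
    """Summarize scrolling behavior from a list of viewed slice indices.

    slice_trace: slice indices in the order they were displayed.
    A pass is a maximal run of movement in one direction (assumed definition).
    """
    passes = []      # number of slices traversed in each one-directional pass
    direction = 0    # +1 forward, -1 backward, 0 not yet moving
    length = 0
    for prev, cur in zip(slice_trace, slice_trace[1:]):
        step = cur - prev
        if step == 0:
            continue  # reader stayed on the same slice
        step_dir = 1 if step > 0 else -1
        if direction != 0 and step_dir != direction:
            passes.append(length)  # direction changed: close the current pass
            length = 0
        direction = step_dir
        length += abs(step)
    if length > 0:
        passes.append(length)
    return {
        "passes": len(passes),
        "reversals": max(len(passes) - 1, 0),
        "mean_slices_per_pass": sum(passes) / len(passes) if passes else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical trace: forward to slice 4, back to 2, forward again to 5.
    print(browsing_metrics([1, 2, 3, 4, 3, 2, 3, 4, 5]))
```

Computing these values per case and grouping them by outcome (True Positive, False Positive, and so on) would give the kind of comparison reported above.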

Assessment of Diagnostic Performance

The gold standard in medical image quality assessment is diagnostic performance studies - typically a receiver operating characteristic (ROC)-based assessment paradigm. These studies quantify how well users can conduct a specific task using a particular imaging system - usually their ability to discriminate between abnormal and normal cases (cases with and without lesions). Systems with better discriminative ability (e.g. higher AUC) are considered to have better image quality.
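As a small illustration of the AUC mentioned above, the sketch below computes the empirical area under the ROC curve from reader confidence ratings using the Mann-Whitney formulation: the probability that a randomly chosen abnormal case is rated higher than a randomly chosen normal case, with ties counted as one half. The function name and the ratings are made up for the example, and real studies typically use dedicated multi-reader, multi-case analysis methods.

```python
def auc_from_ratings(normal_ratings, abnormal_ratings):
    """Empirical AUC via pairwise comparison (Mann-Whitney statistic).

    Higher ratings are assumed to mean higher confidence that a lesion is present.
    """
    if not normal_ratings or not abnormal_ratings:
        raise ValueError("need at least one normal and one abnormal case")
    wins = 0.0
    for a in abnormal_ratings:
        for n in normal_ratings:
            if a > n:
                wins += 1.0   # abnormal case correctly ranked higher
            elif a == n:
                wins += 0.5   # tie counts as half
    return wins / (len(abnormal_ratings) * len(normal_ratings))


if __name__ == "__main__":
    # Hypothetical 5-point confidence ratings from one reader.
    normal = [1, 2, 2, 3]
    abnormal = [2, 3, 4, 5]
    print(f"AUC = {auc_from_ratings(normal, abnormal):.3f}")
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation of normal and abnormal cases.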

We evaluated diagnostic performance for two clinical applications:

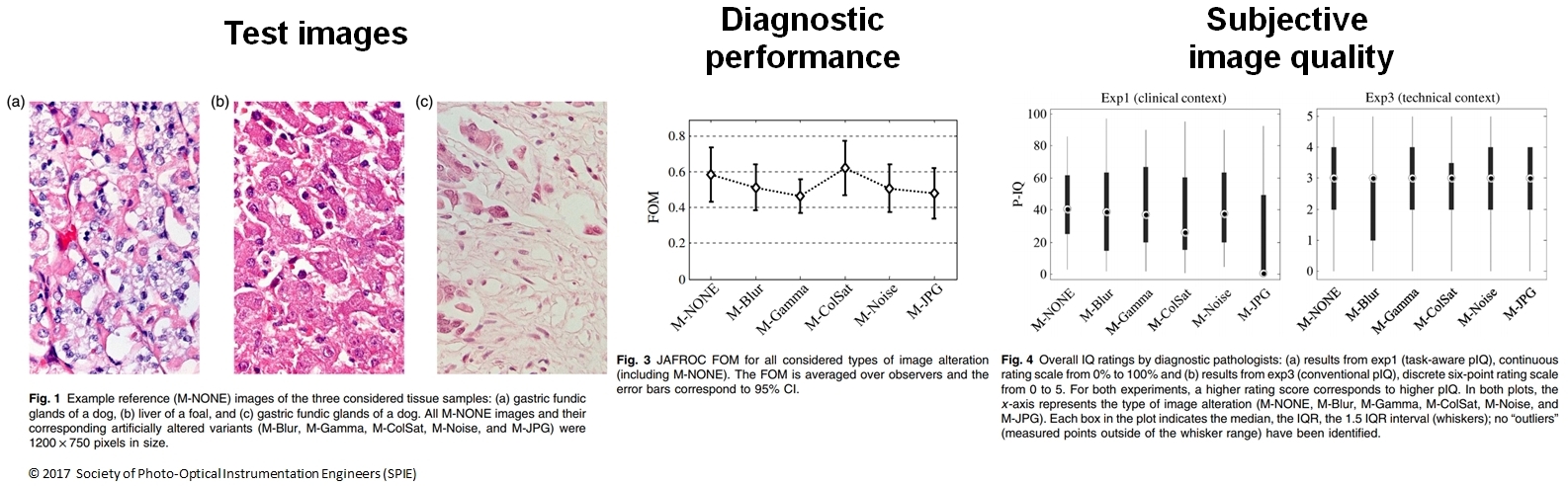

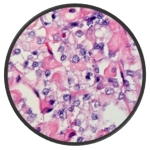

- Detection performance versus image quality preferences in digital pathology (co-author on journal paper).

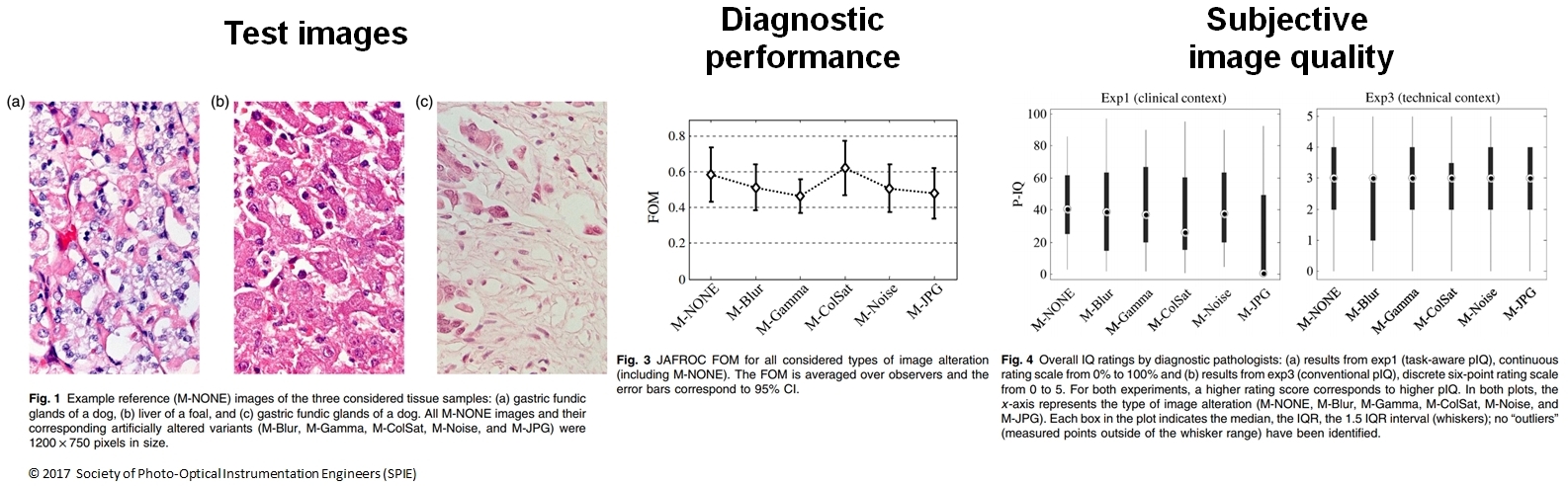

Our results suggest the need to define a clinical task. In the figure, example images are shown on the left (Fig. 1). Images were manipulated to modify the amount of blur, gamma, color saturation, noise, and JPG compression. The observers participated in two experimental setups. In Exp1, they were instructed to detect pathological abnormalities (detection task) and conduct image quality assessment (IQA). In Exp3, they conducted only IQA. Fig. 3 shows the detection performance (JAFROC) and Fig. 4 shows the IQ scores.

The correlation between detection performance and IQ scores was mixed (Figs. 3 vs 4), and the IQ scores had larger variation. Furthermore, the experimental setup affected the IQ scores (Fig. 4 left vs right).

For example, while JPG compression did not result in reduced detection performance (Fig. 3), observers rated its IQ very low in Exp1 (Fig. 4 left) but moderate in Exp3 (Fig. 4 right). Subjective preferences may not be a suitable proxy for diagnostic image quality and may give misleading information on the utility of the images for conducting a clinical task.

Study led by Ljiljana Platisa.

- L. Platiša, L. Van Brantegem, A. Kumcu, R. Ducatelle, W. Philips, "Influence of study design on digital pathology image quality evaluation: the need to define a clinical task", Journal of Medical Imaging, vol. 4, no. 2, pp. 1–12, 2017


- Detection performance on single versus multislice image reading for varying background and signal content (co-author on conference paper). Study led by Ljiljana Platisa.

We conducted two studies to evaluate the diagnostic performance of medical displays:

- DICOM calibration of medical stereoscopic (3D) displays (co-author on journal paper). Correct medical display calibration contributes to optimal diagnostic performance. In this study, the 3D contrast sensitivity function was measured for a 3D diagnostic display. Findings suggest that 2D DICOM calibration may be valid for 3D visualization.

See here for a summary of the study. Study led by Johanna Rousson.

- Validation of a new digital breast tomosynthesis medical display (co-author on conference paper).

Study led by Asli Kumcu.

Assessment of Subjective Image Quality

Subjective quality assessment (QA) consists of measuring the user's subjective opinion of perceived image/video quality, or the user's quality preferences.

In medical imaging, subjective QA is not a replacement for diagnostic studies, since it does not measure how useful an image is at doing its job: allowing the user to conduct a specific task, such as detecting a lesion. Subjective QA may still be useful for understanding the relationship between imaging parameters and the user's perception/experience, or for finding thresholds at which differences become perceptible. However, it is important to realize that subjective preference may not necessarily correlate with diagnostic performance - see here for a recent study we conducted on this question.

Subjective QA plays a larger role in non-medical applications, such as television or video streaming, since typically the goal in these applications is to develop imaging systems that produce visually appealing images and video. Typically we conduct our studies following ITU guidelines, such as Recommendation BT.500.
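Ratings from such studies are commonly summarized per stimulus as a mean opinion score (MOS) with a confidence interval. The sketch below uses a normal-approximation 95% interval (mean ± 1.96·s/√N); it is a simplified illustration with made-up ratings and a hypothetical function name, and it omits steps such as observer screening, so the Recommendation itself should be consulted for the full procedure.

```python
import math
import statistics


def mean_opinion_score(ratings, z=1.96):
    """Return (MOS, (ci_low, ci_high)) for one stimulus.

    ratings: scores given by different observers to the same stimulus.
    z: normal quantile for the interval (1.96 for roughly 95%).
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two ratings to estimate spread")
    mos = statistics.fmean(ratings)
    sd = statistics.stdev(ratings)        # sample standard deviation (N - 1)
    half_width = z * sd / math.sqrt(n)
    return mos, (mos - half_width, mos + half_width)


if __name__ == "__main__":
    # Hypothetical 5-point ratings from five observers.
    mos, (low, high) = mean_opinion_score([4, 5, 3, 4, 4])
    print(f"MOS = {mos:.2f}, 95% CI = [{low:.2f}, {high:.2f}]")
```

With small observer panels, a Student's t quantile in place of `z` gives a wider and more conservative interval.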

The following publications discuss methodological issues related to preparing and analyzing subjective QA studies:

- Best practices for subjective quality assessment of medical images and video, by the Quality of Experience in Medical Imaging and Healthcare task force (co-author on conference paper). The paper is a combined effort of the Qualinet medical task force and was written by Lucie Lévêque.

L. Lévêque, H. Liu, S. Baraković, J. B. Husić, M. Martini, M. Outtas, L. Zhang, A. Kumcu, L. Platiša, R. Rodrigues, A. Pinheiro, A. Skodras, "On the Subjective Assessment of the Perceived Quality of Medical Images and Videos", in 2018 Tenth International Conference on Quality of Multimedia Experience (QoMEX), pp. 1-6, 2018

- Analysis of experimental protocol and statistical analysis methods for clinical and commercial applications (journal paper).

A. Kumcu, K. Bombeke, L. Platiša, L. Jovanov, W. Philips, "Performance of four subjective video quality assessment protocols and impact of different rating pre-processing and analysis methods", IEEE Journal of Selected Topics in Signal Processing, vol. 11, no. 1, pp. 48-63, Feb 2017

- Methodology to select stimuli for video quality assessment studies; applied to interventional cardiac x-ray imaging and laparoscopic surgery video (conference paper, [preprint pdf]).

A. Kumcu, L. Platiša, H. Chen, A. J. Gislason-Lee, A. G. Davies, P. Schelkens, Y. Taeymans, W. Philips, "Selecting stimuli parameters for video quality assessment studies based on perceptual similarity distances", in Proc. SPIE, Image Processing: Algorithms and Systems XIII, pp. 93990F-93990F-10, 2015

In the following studies, we evaluated the effects of expertise, image content and imaging parameters on image quality preferences:

- Evaluation of the effects of expertise and content on the perceived quality of H.264/AVC compressed laparoscopic surgery video (conference paper, [preprint pdf]).

A. Kumcu, K. Bombeke, H. Chen, L. Jovanov, L. Platiša, H. Q. Luong, J. Van Looy, Y. Van Nieuwenhove, P. Schelkens, W. Philips, "Visual quality assessment of H.264/AVC compressed laparoscopic video", in SPIE Medical Imaging, Proceedings, pp. 90370A-90370A-12, 2014

- Assessment of the perceived quality of interventional cardiac x-ray sequences (co-author on conference paper). Study led by Amber Gislason-Lee.

- Subjective quality assessment of various degradations in stereoscopic medical images (co-author on conference paper). Study led by Johanna Rousson.

- Assessment of the perceived quality of degraded digital pathology images (co-author on conference presentation). Study led by Ljiljana Platisa. Related to the study "Influence of study design on digital pathology image quality evaluation: the need to define a clinical task".

- Subjective assessment of mechanical wear degradation for a materials science application (co-author on journal paper). Study led by Seyfollah Soleimani.

This research has been partially or wholly funded thanks to the following grants:

- iMinds Telesurgery

- iMinds CIMI

- iMinds 3DTV