Object detection

Object detection algorithms that operate on visible light alone perform poorly in adverse weather conditions. Effects such as fog, rain, and flares from sunlight or oncoming traffic can corrupt parts of the image, or the whole image, with noise. This degradation is even more pronounced during hours when ambient light is low. The system proposed on this page is designed to extract useful information from several data sources that, in combination, are invariant to light conditions. RGB camera data is reinforced with depth images generated from a LiDAR and heat signatures from a thermal camera. By building an integrated detection model, it is possible to detect road users (pedestrians, cyclists, and various vehicles) with a high degree of confidence even when one of the sensors fails to perform.

Even when the light conditions are perfect, problems can arise when objects become occluded. In this situation, depth data is crucial for distinguishing between the various occluders. Moreover, because the depth data originates from an active light sensor, it is inherently invariant to ambient light levels, and the system can effectively detect occlusions in pitch darkness.
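To illustrate how depth can separate overlapping objects, here is a minimal sketch that orders candidate bounding boxes from nearest to farthest by their median depth. It is not the method used by the system on this page. The function names, the `(x0, y0, x1, y1)` box layout, and the convention that a depth of 0 marks a pixel with no LiDAR return are all assumptions made for the example.

```python
import numpy as np

def median_depth(depth, box):
    """Median of the valid depth values inside a box (x0, y0, x1, y1).

    Assumption: depth == 0 marks pixels without a LiDAR return.
    """
    x0, y0, x1, y1 = box
    patch = depth[y0:y1, x0:x1]
    valid = patch[patch > 0]
    # Boxes without any valid depth are treated as infinitely far away.
    return float(np.median(valid)) if valid.size else float("inf")

def order_by_depth(depth, boxes):
    """Return box indices sorted from nearest to farthest."""
    return sorted(range(len(boxes)), key=lambda i: median_depth(depth, boxes[i]))
```

With two overlapping boxes, the index that comes first can be read as the likely occluder and the one that follows as the likely occluded object. The median is used here because it tolerates background pixels that fall inside a box.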

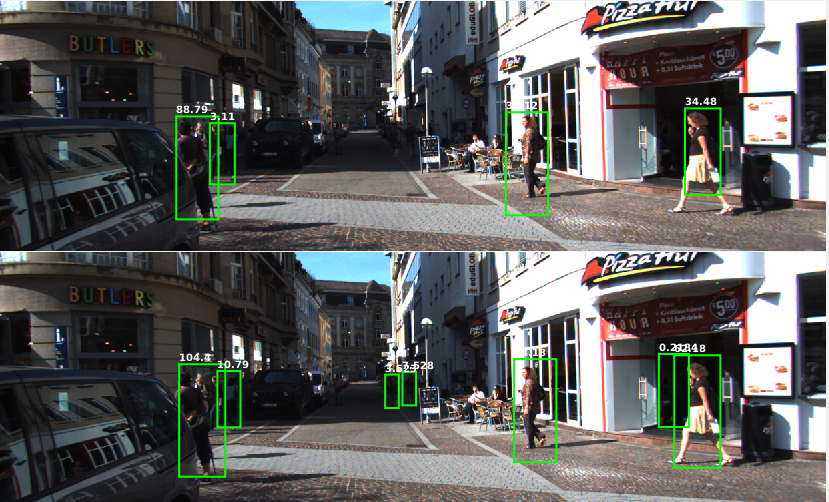

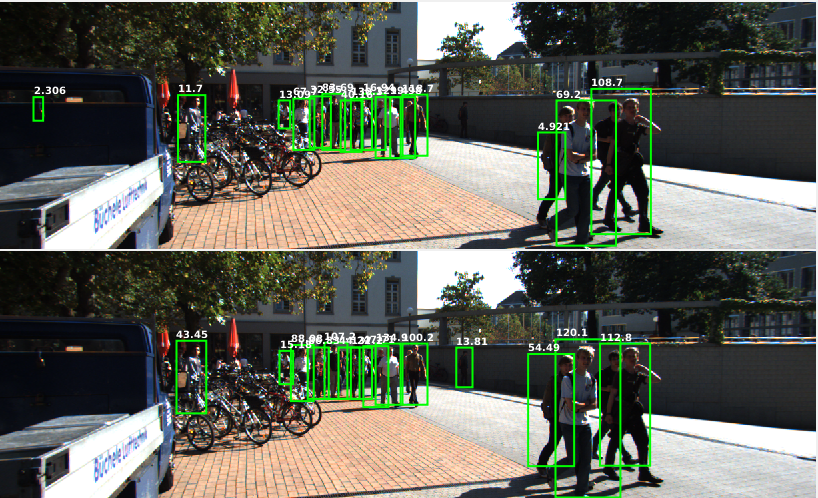

The most complicated scenario is an occluded object standing immediately next to a background that has a very similar appearance. In such a case, adding the object's thermal signature is a reliable way of distinguishing the object from the background. In the following examples, one can clearly see the difficulty of detecting pedestrians and how the system benefits from the addition of depth data.

Various examples where the RGB-only detector (top) fails to detect difficult pedestrians and the RGB+depth detector (bottom) performs better.

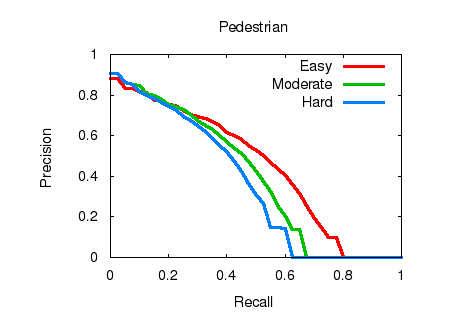

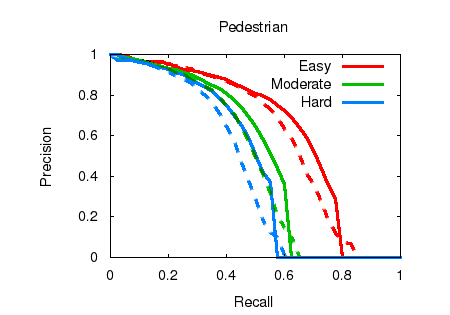

The proposed pedestrian detector is based on the Aggregated Channel Features (ACF) detector, and the results on this page were obtained by adding depth images and retraining the model on the KITTI dataset. The baseline detector has a detection rate of ~40%, whereas the augmented RGB+depth detector detects ~51% of the pedestrians. Notably, the proposed system also outperforms a comparable technique, FusionDPM, by ~4%.
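The idea of adding depth to a channel-feature detector can be sketched as follows. This is a simplified illustration, not the detector described above: the standard ACF detector uses LUV color, gradient magnitude, and gradient orientation histogram channels, whereas this sketch keeps only raw color, gradient magnitude, and the depth image with its own gradient magnitude. The function names, the choice of channels, and the 4x4 block size are assumptions.

```python
import numpy as np

def gradient_magnitude(img):
    """Per-pixel gradient magnitude of a 2-D array."""
    gy, gx = np.gradient(img.astype(np.float64))
    return np.hypot(gx, gy)

def aggregate(channels, block=4):
    """Average each channel over non-overlapping block x block cells."""
    h, w, c = channels.shape
    h, w = h - h % block, w - w % block  # crop to a multiple of the block size
    cells = channels[:h, :w].reshape(h // block, block, w // block, block, c)
    return cells.mean(axis=(1, 3))

def rgbd_channels(rgb, depth, block=4):
    """Stack color, intensity-gradient, depth, and depth-gradient channels,
    then aggregate them into a coarse feature map of shape (H/block, W/block, 6).

    Assumption: `rgb` is an (H, W, 3) uint8 image and `depth` is an (H, W)
    depth image already registered to the camera view.
    """
    rgb = rgb.astype(np.float64) / 255.0
    gray = rgb.mean(axis=2)
    stack = np.dstack([
        rgb,                        # 3 color channels
        gradient_magnitude(gray),   # 1 appearance-edge channel
        depth,                      # 1 depth channel
        gradient_magnitude(depth),  # 1 depth-edge channel
    ])
    return aggregate(stack, block)
```

In a detector of this family, the aggregated values inside a sliding window would be flattened into a feature vector and passed to a boosted classifier. Retraining that classifier on the extended channel set is what lets the depth cues contribute to the decision.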

Left: performance on KITTI of the baseline RGB-only detector. Right: performance of the proposed detector (solid) and of FusionDPM (dashed).