Any comments to Philippe Serbruyns: or twitter @phiser678.

In our department we have a lot of data to handle. Many researchers have to deal with high-volume or bulk data for their research, such as video sequences, microscopy, hyperspectral images and CT images. To manage this ever-growing data, we use the open source filesystem ZFS. ZFS stands for Zettabyte File System, and a zettabyte is a billion terabytes!

In the beginning we used ext3, ext4 and NTFS, which all have design flaws. ZFS tries to address a number of weaknesses in classical filesystem technology. In case you're missing the point: disks are not reliable at all, and every disk fails sooner or later!

ZFS guarantees data integrity: it stores a checksum for every block it allocates. This way it can detect when a fault has occurred, and it can even repair the data automatically if a redundant copy is available!
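To make the idea of per-block checksums concrete, here is an illustration on an ordinary file, not on ZFS itself: one checksum per block is stored, and after a single byte is damaged, comparing the lists pinpoints the bad block. ZFS does this inside the filesystem (fletcher4 by default); `sha256sum`, the 4 KiB block size and the helper names `block_checksums` and `bad_blocks` are assumptions made for this sketch. It needs GNU coreutils (`stat -c`, `dd status=none`).

```shell
#!/usr/bin/env bash
# Illustration only: per-block checksums on a regular file.
# Assumptions: sha256 instead of ZFS's fletcher4, 4 KiB blocks, GNU coreutils.

# Print one sha256 checksum per block of FILE (block size BS, default 4096).
block_checksums() {
  local file=$1 bs=${2:-4096}
  local size blocks i
  size=$(stat -c %s "$file")
  blocks=$(( (size + bs - 1) / bs ))
  for (( i = 0; i < blocks; i++ )); do
    dd if="$file" bs="$bs" skip="$i" count=1 status=none | sha256sum | cut -d' ' -f1
  done
}

# Hypothetical helper: compare two checksum lists line by line and print the
# 0-based numbers of the blocks whose checksums differ.
bad_blocks() {
  paste -d' ' "$1" "$2" | awk '$1 != $2 { print NR - 1 }'
}
```

Usage: run `block_checksums file > stored` once, later `block_checksums file > now`, then `bad_blocks stored now` lists the damaged blocks. Where ZFS has a redundant copy, it would rewrite exactly those blocks.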

ZFS has its own RAID system; the buzzword is "software-defined storage". ZFS has had this from the beginning, which is already more than 12 years! You can choose 1, 2 or 3 disks' worth of redundancy (raidz1, raidz2 or raidz3). When an error on a disk is detected, only the allocated data has to be checked, not the whole disk. You don't have to invest in expensive RAID controllers either; a plain HBA (host bus adapter) is enough. You can also move your disks to other systems: hop from Linux to FreeBSD, and yes, there's even a macOS port!
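The trade-off behind choosing 1, 2 or 3 redundant disks can be put in a small function: a raidz vdev with P parity disks (raidz1, raidz2, raidz3) keeps N-P disks' worth of data and survives P failed disks. This is a rough sketch under a stated simplification: it ignores metadata, padding and reserved space, so a real pool reports somewhat less. The function name `raidz_usable` is hypothetical.

```shell
#!/usr/bin/env bash
# Rough usable capacity of one raidz vdev.
# Simplification (assumption): ZFS metadata/padding overhead is ignored.

# raidz_usable DISKS SIZE_TB PARITY -> usable TB
raidz_usable() {
  local disks=$1 size=$2 parity=$3
  # parity must be 1..3 and there must be more disks than parity disks
  if (( parity < 1 || parity > 3 || disks <= parity )); then
    echo "invalid layout" >&2
    return 1
  fi
  echo $(( (disks - parity) * size ))
}

for p in 1 2 3; do
  echo "raidz$p of 5 x 8TB: $(raidz_usable 5 8 "$p")TB usable, survives $p failed disk(s)"
done
```

For five 8TB disks this gives 32TB (raidz1), 24TB (raidz2) or 16TB (raidz3): every extra parity disk costs one disk of space.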

Data is written in transactions. This means your filesystem should never be corrupted: after a power failure it comes back in a known good state, and you don't need offline filesystem checks. ZFS checks the filesystem online (a scrub), while your filesystem is in use. That's right, no downtime!

ZFS is a copy-on-write filesystem, so it can save the state of your data in a snapshot, instantly.

Replication of data is handled through snapshots. The filesystem knows what has changed, so it doesn't have to build a lengthy, time-consuming index when you have lots of files to transfer.
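The decision behind snapshot-based replication fits in a few lines: if the other side has no snapshot yet, the whole dataset is sent; if it already has an older snapshot, `zfs send -i` produces a stream with only the blocks that changed between the two snapshots. This is a simplified dry-run sketch that only prints the command; it assumes the remote's newest snapshot still exists locally, and the function name `send_plan` and the dataset/snapshot names are hypothetical. In practice the stream is piped into `zfs receive` on the other machine.

```shell
#!/usr/bin/env bash
# Dry run: decide between a full and an incremental zfs send.
# Assumption: PREVIOUS (newest snapshot on the target) also exists locally.

# send_plan DATASET LAST_LOCAL_SNAP PREVIOUS_REMOTE_SNAP
send_plan() {
  local ds=$1 last=$2 previous=$3
  if [ -z "$previous" ]; then
    echo "zfs send $ds@$last"                    # full stream
  elif [ "$last" = "$previous" ]; then
    echo "up-to-date"                            # nothing to send
  else
    echo "zfs send -i $ds@$previous $ds@$last"   # only the changes
  fi
}

send_plan tank/data snapB snapA
```

This prints `zfs send -i tank/data@snapA tank/data@snapB`; no file tree has to be walked to find out what changed.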

ZFS can compress data on the fly, both files and disk images (so-called zvols).
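How much extra space compression buys can be estimated from the `compressratio` property, which ZFS reports as a ratio such as `1.45x` (for example via `zfs get compressratio tank`). As a back-of-the-envelope sketch, the logical amount of data stored is roughly the physical space used times that ratio. The function name `logical_size` and the numbers in the example are hypothetical, not measurements from our pool.

```shell
#!/usr/bin/env bash
# Estimate logical data stored from physical space used and compressratio.
# Approximation (assumption): logical ~= used x ratio.

# logical_size USED RATIO -> approximate logical size, same unit as USED
logical_size() {
  local used=$1 ratio=${2%x}   # strip the trailing "x" of e.g. "1.45x"
  awk -v u="$used" -v r="$ratio" 'BEGIN { printf("%.1f\n", u * r) }'
}

logical_size 32 1.45x
```

With these example values, 32TB of physical space would hold about 46.4TB of uncompressed data; already-compressed data such as video gains little.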

ZFS works with groups of disks (vdevs). You can have:

More info on Wikipedia.

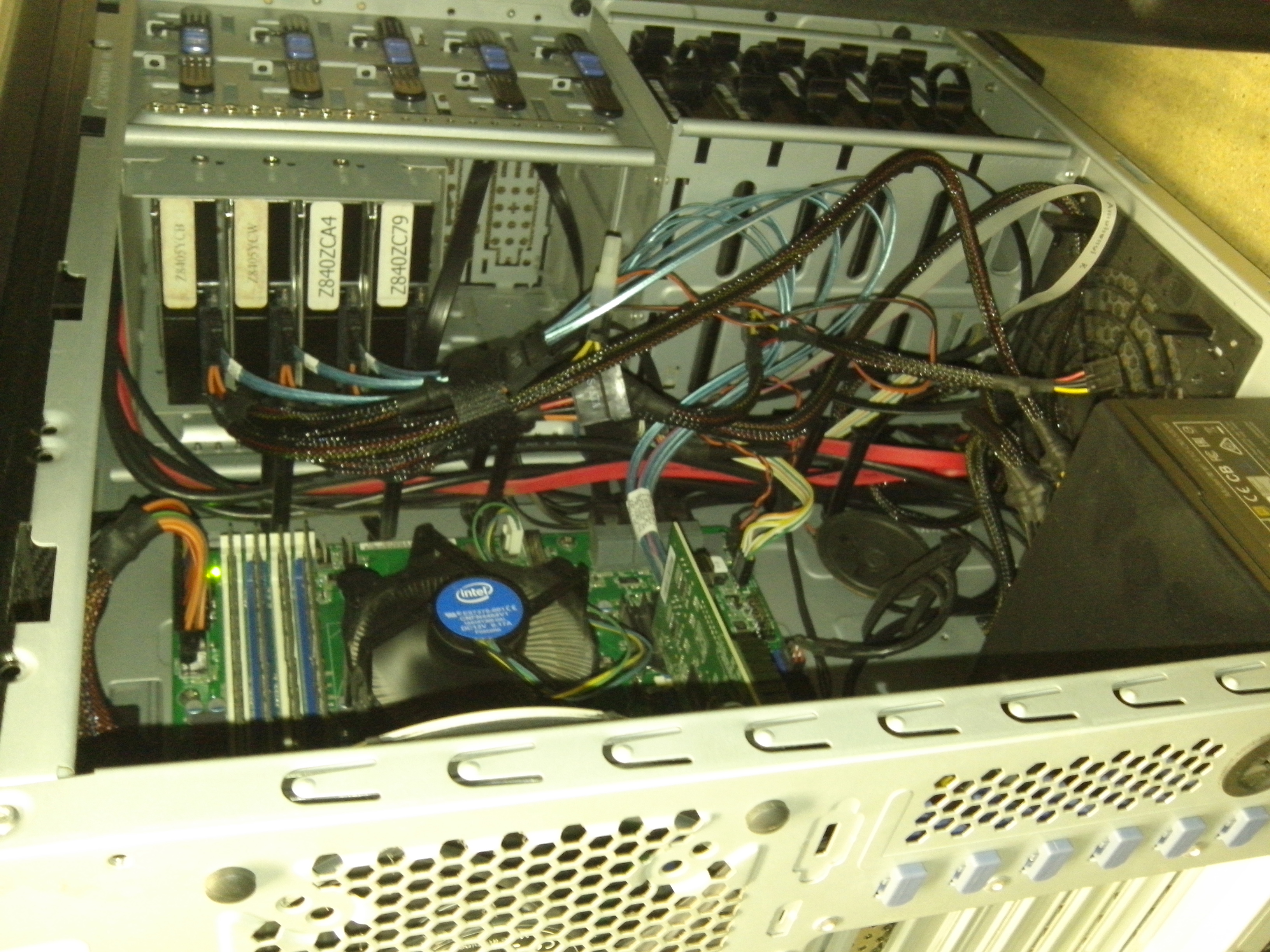

We started with a system of five 8TB disks in a raidz1, which means one disk can fail completely. This gave us 32TB net, and we also enabled lz4 compression to get even more space. We then added 2 mirrored 3TB disks, which we inherited from a RAID5 server. As disk usage grew, we added another mirror of 2x 8TB. Our tower system is now almost full, with its 9 disks.
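The layout described above could be built roughly like this. This is a dry-run sketch: `run` only prints each command, and the device names (`d8a`, `d3a`, ...) are hypothetical placeholders, whereas the real pool uses partitions under `/dev/disk/by-id`. When a mirror vdev is added to a pool that already has a raidz vdev, `zpool add` complains about a mismatched replication level, so `-f` is needed to mix vdev types.

```shell
#!/usr/bin/env bash
# Dry run: print the commands for the pool layout; device names are hypothetical.
# Replace the body of run() with "$@" to actually execute the commands.
run() { echo "$*"; }

build_pool() {
  # Five 8TB disks in one raidz1 vdev, lz4 compression for the whole pool
  run zpool create tank raidz1 d8a d8b d8c d8d d8e
  run zfs set compression=lz4 tank
  # Two inherited 3TB disks as a mirror vdev; -f overrides the
  # "mismatched replication level" check (raidz pool, mirror vdev)
  run zpool add -f tank mirror d3a d3b
  # Later: another pair of 8TB disks as a second mirror vdev
  run zpool add -f tank mirror d8f d8g
}

build_pool
```

Note that vdevs added this way are striped: losing any single vdev completely (for example both disks of one mirror) loses the whole pool.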

This is the current status:

```
# zpool status
  pool: tank
 state: ONLINE
  scan: resilvered 25.3G in 0h12m with 0 errors on Fri Dec 22 10:33:12 2017
config:

  NAME                                                STATE     READ WRITE CKSUM
  tank                                                ONLINE       0     0     0
    raidz1-0                                          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z840527Z-part3          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z8405JVX-part3          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z8405JX1-part3          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z8405YCB-part3          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z8405YCW-part3          ONLINE       0     0     0
    mirror-1                                          ONLINE       0     0     0
      ata-WDC_WD30EFRX-68EUZN0_WD-WMC4N0524973-part4  ONLINE       0     0     0
      ata-WDC_WD30EFRX-68EUZN0_WD-WMC4N0531778-part4  ONLINE       0     0     0
    mirror-2                                          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z840ZC79-part4          ONLINE       0     0     0
      ata-ST8000AS0002-1NA17Z_Z840ZCA4-part4          ONLINE       0     0     0

errors: No known data errors
```

As you can see in the status, ZFS detected a bad disk and corrected it with a resilver (25.3GB in 12 minutes with 0 errors, on a total of 32+3+8=43TB!). The system is now 95% full, so the next step will be replacing the two 3TB disks with 8TB disks, so that all disks are 8TB. Just replacing the two 3TB disks would add 5TB (8TB - 3TB), for a net total of 48TB. However, a single raidz1 of all 9 disks, without the mirrors, would give us 64TB of disk space, 16TB extra. Unfortunately, this change is not possible on a live filesystem, so we will have to reformat everything! I will keep you updated when it happens; we will probably also make it a raidz2 by adding another disk, so 10 disks in total.
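The capacity arithmetic for the current pool and the options above can be checked with a few lines of bash. Simplification (an assumption, as in any back-of-the-envelope estimate): a raidz vdev with P parity disks keeps N-P disks of data, a 2-way mirror keeps one disk, and ZFS overhead is ignored. The helper names `raidz` and `mirror` are hypothetical.

```shell
#!/usr/bin/env bash
# Net capacity (TB) of the current pool and of the planned alternatives.
# Simplification (assumption): no ZFS metadata/padding overhead.
raidz()  { echo $(( ($1 - $3) * $2 )); }   # raidz DISKS SIZE_TB PARITY
mirror() { echo "$1"; }                    # mirror SIZE_TB (two disks)

current=$(( $(raidz 5 8 1) + $(mirror 3) + $(mirror 8) ))
replaced=$(( $(raidz 5 8 1) + $(mirror 8) + $(mirror 8) ))
raidz1_9=$(raidz 9 8 1)
raidz2_10=$(raidz 10 8 2)

echo "current layout:     ${current}TB"
echo "3TB mirror -> 8TB:  ${replaced}TB"
echo "raidz1, 9 x 8TB:    ${raidz1_9}TB (+$(( raidz1_9 - replaced ))TB)"
echo "raidz2, 10 x 8TB:   ${raidz2_10}TB"
```

The raidz2 option with 10 disks keeps the same 64TB as raidz1 with 9 disks, but survives two failed disks instead of one.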

The system takes regular snapshots, so if someone accidentally wipes out a file, it can be recovered. But what happens if something goes wrong with the system itself (e.g. fire, theft or even a faulty erase command by the sysadmin)? Right, you will have a big, big problem if you cannot access, or even lose, 48TB of data from all your fellow researchers! Of course, you need a duplicate of the system, so that after a terrible accident you still have a clone of all your data. And since even this backup could be lost at the same time as the first system, we implemented a third system at another location!
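For regular snapshots, a naming scheme based on a UTC timestamp has the useful property that sorting by name is the same as sorting by time, which makes the newest snapshot easy to pick for replication. The scheme `auto-YYYY-mm-dd_HHMM` and the function name `snap_cmd` are hypothetical, not the names used on our systems; the sketch only prints the `zfs snapshot` command and needs GNU `date` for `-d @EPOCH`.

```shell
#!/usr/bin/env bash
# Dry run: build a timestamped snapshot command (hypothetical naming scheme).
# Requires GNU date for "-d @EPOCH".

# snap_cmd DATASET [EPOCH] -> zfs snapshot DATASET@auto-YYYY-mm-dd_HHMM (UTC)
snap_cmd() {
  local ds=$1 epoch=${2:-$(date +%s)}
  echo "zfs snapshot $ds@auto-$(date -u -d "@$epoch" +%Y-%m-%d_%H%M)"
}

snap_cmd tank/research
```

Run from cron, for example hourly, this gives a steady series of restore points; old snapshots still have to be pruned separately.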

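So we need some synchronization between the systems. It is done by this small script:

```bash
#!/bin/bash
# Send the newest snapshot of each dataset to a target host, incrementally
# when the target already has an older snapshot.
# Usage: <script> TARGET DATASET [DATASET ...]
version=1.08

run=1                 # 1 = execute the transfer, 0 = only print the commands
set -o pipefail       # a failure anywhere in a pipeline sets the exit status

. /root/backup-dblog  # defines the dblog logging function (not shown here)

source=$(hostname -s)
target=$1
shift

# pv rate limit; transfers from or to ipids-hogent get a lower limit
speed=33333k
if [ "$source" == "ipids-hogent" ] || [ "$target" == "ipids-hogent" ]; then speed=8888k; fi

for ds in "$@"; do
  # dd writes its transfer statistics here (slashes in the name become _)
  tmp=/tmp/transfer-$source-${ds//\//_}.log

  # Newest snapshot name, locally and on the target. -d 1 limits the list to
  # this dataset's own snapshots (not child datasets); -s creation sorts oldest first.
  last=$(zfs list -t snapshot -H -o name -s creation -d 1 "$ds" | tail -n 1 | sed 's/.*@//')
  previous=$(ssh "$target" zfs list -t snapshot -H -o name -s creation -d 1 "$ds" | tail -n 1 | sed 's/.*@//')

  if [ -z "$previous" ]; then
    # First run: create the dataset (and parents) on the target, send a full stream
    echo "$ds not on target yet."
    exec="ssh $target zfs create -p $ds;zfs send $ds@$last|lz4c|pv -q -L $speed|dd bs=4k 2>$tmp|ssh $target lz4c -d\|zfs receive -F $ds"
    echo "$exec"
  elif [ "$last" == "$previous" ]; then
    exec="sleep 1"
    echo "0 bytes" >"$tmp"
    echo "$ds up-to-date"
  else
    # Incremental stream with only the changes between the two snapshots
    exec="zfs send -i $ds@$previous $ds@$last|lz4c|pv -q -L $speed|dd bs=4k 2>$tmp|ssh $target lz4c -d\|zfs receive -F $ds"
    echo "$exec"
  fi

  tic=$(date '+%s')
  if [ "$run" -eq 1 ]; then
    eval "$exec"
    error=$?
  else
    error=0           # dry run: nothing executed, nothing failed
  fi
  toc=$(date '+%s')

  # Transferred MiB: first field (bytes) of the last line dd wrote to $tmp
  transferred=$(awk '{s=$1} END {printf("%.0f\n",s/1024/1024)}' "$tmp")
  # "objects:0:MiB" for the dataset, from zdb's parsable (-P) output
  log=$(/sbin/zdb -dP "$ds" | awk -F, '{printf("%.0f:0:%.0f\n",$5,$4/1024/1024)}')

  dblog $tic:$toc:$source:$target=$ds:$error:$log:$transferred
done
```

to be continued...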