What are the advantages for the end-users:

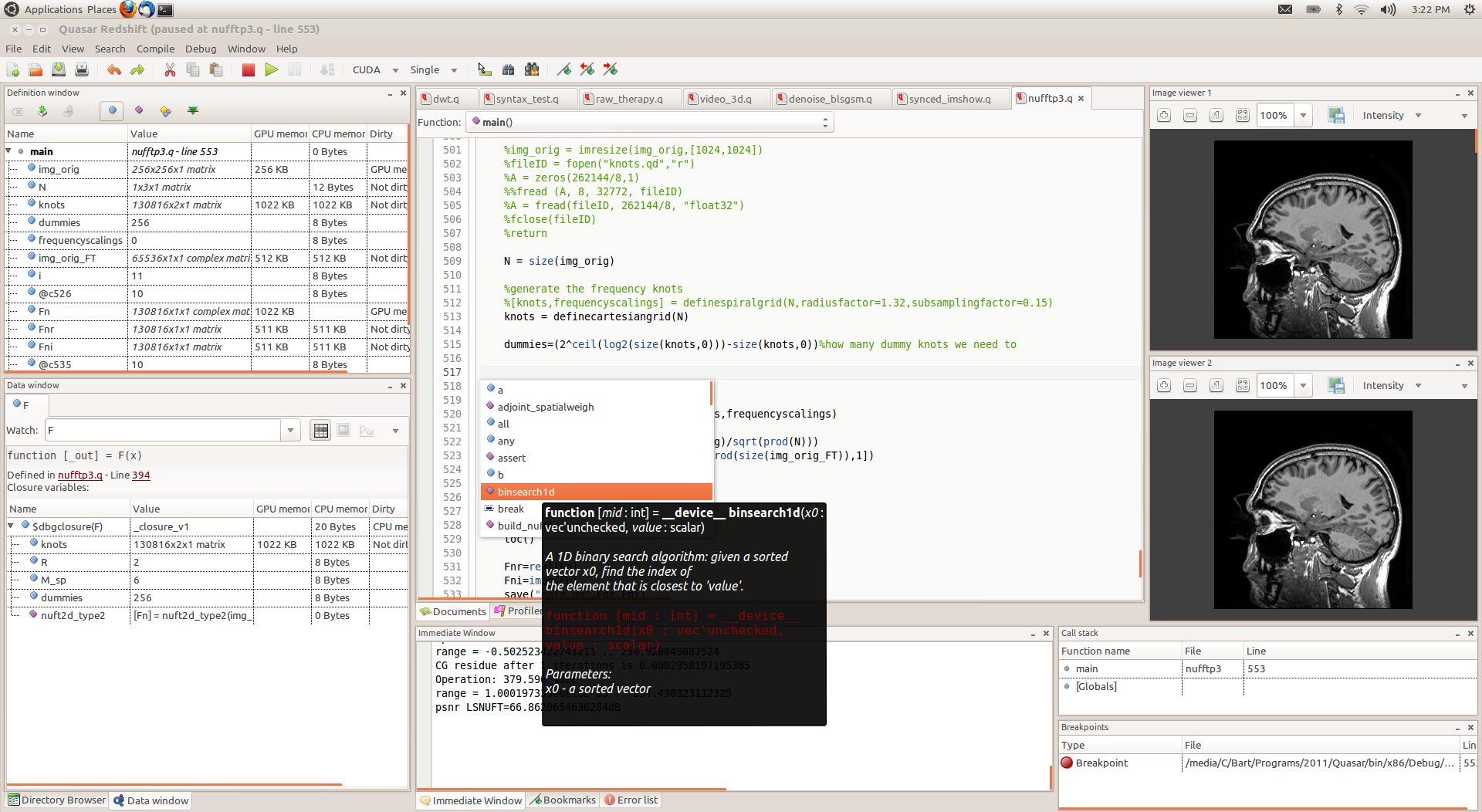

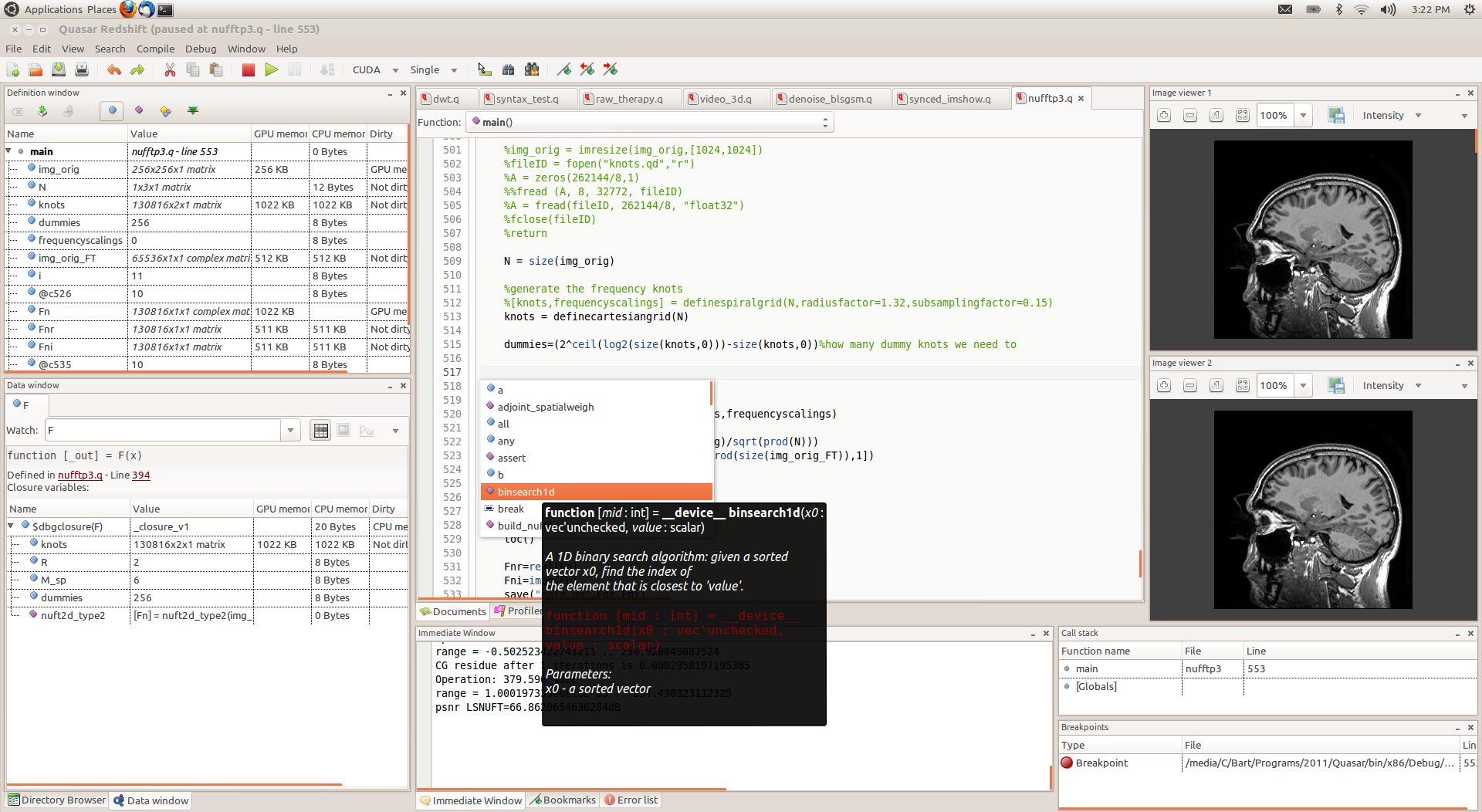

Figure 1. Screenshot of the Quasar Redshift IDE running on Linux Mint.

The documents listed below give a more detailed overview of Quasar and of several core aspects of the framework.

| Document name | Description |
| --- | --- |
| Quasar leaflet | General high-level information on the possibilities of Quasar |
| International Conference on Image Processing paper, 2014 | Our first conference paper and interactive demonstration session on Quasar. The examples highlight the development and debugging facilities of the Quasar Redshift IDE, with an MRI reconstruction demo and a video processing demo. |
| International Conference on Distributed Smart Cameras paper, 2015 | Our second conference paper and interactive demonstration of Quasar. Also see the real-time depth from stereo video below. |
| Quick Reference Manual | The Quick Reference Manual is a document aimed at Quasar end-users. It explains various Quasar programming concepts. |
| CUDA Guide | The CUDA guide explains several CUDA optimization features that are implemented in Quasar and how they can be accessed from user programs. The document shows that actually, most features are enabled transparently, without user interaction. |

See our website: gepura.io/quasar

As a first case, we implemented three algorithms as custom benchmarks, both directly in CUDA and in Quasar, and we compare the development times and the execution times of the two versions.

The execution times on a GeForce GTX 780M (Kepler), the lines of code (LOC) and the development times are given in the table below. With roughly 2x to 3x less code and a significantly lower development time, the resulting execution times are close to those of the CUDA implementations of the algorithms.

| Test program | CUDA - time (ms) | Quasar - time (ms) | CUDA - LOC | Quasar - LOC | CUDA - Dev time | Quasar - Dev time | Description | Runs of the algorithm |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| filter (1) | 3042.29 | 3051.174 | 140 | 61 | 1h20 | 0h15 | Filter 32 taps, with global memory | 10000 |
| filter (2) | 958.832 | 831.0475 | | | | | Filter 32 taps, with shared memory | 10000 |
| surfwrite (1) | 4998.2 | 5056.289 | 195 | 61 | 1h30 | 0h20 | 2D spatial filter 32x32 separable, with global memory | 10000 |
| surfwrite (2) | 2144.71 | 2286.131 | | | | | 2D spatial filter 32x32 separable, with texture & surface memory | 10000 |
| tex4 (1) | 410.23 | 518.0296 | 348 | 120 | 3h50 | 0h30 | Wavelet filter, with global memory | 1000 |
| tex4 (2) | 384.548 | 386.0221 | | | | | Wavelet filter, with global memory & float3 | 1000 |
| tex4 (3) | 486.875 | 352.0201 | | | | | Wavelet filter, with texture memory (1 component) | 1000 |
| tex4 (4) | 119.801 | 170.0098 | | | | | Wavelet filter, with texture memory (RGBA) | 1000 |
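To make the comparison in the table easier to read, the ratios can be worked out directly from its figures. The short Python sketch below does only that arithmetic; the helper names `loc_reduction` and `relative_runtime` are hypothetical and not part of Quasar or CUDA, and the numbers are copied from the table.

```python
# Figures copied from the benchmark table (CUDA value first, Quasar value second).
# The helper names below are illustrative only.
loc = {
    "filter": (140, 61),
    "surfwrite": (195, 61),
    "tex4": (348, 120),
}
times_ms = {
    "filter (1)": (3042.29, 3051.174),
    "filter (2)": (958.832, 831.0475),
    "surfwrite (1)": (4998.2, 5056.289),
    "surfwrite (2)": (2144.71, 2286.131),
    "tex4 (1)": (410.23, 518.0296),
    "tex4 (2)": (384.548, 386.0221),
    "tex4 (3)": (486.875, 352.0201),
    "tex4 (4)": (119.801, 170.0098),
}


def loc_reduction(cuda_loc, quasar_loc):
    """How many times fewer lines the Quasar version needs."""
    return cuda_loc / quasar_loc


def relative_runtime(cuda_ms, quasar_ms):
    """Quasar runtime divided by CUDA runtime (below 1.0 means Quasar is faster)."""
    return quasar_ms / cuda_ms


for name, (cuda, quasar) in loc.items():
    print(f"{name}: {loc_reduction(cuda, quasar):.1f}x fewer lines in Quasar")

for name, (cuda, quasar) in times_ms.items():
    print(f"{name}: Quasar/CUDA runtime = {relative_runtime(cuda, quasar):.2f}")
```

With these figures the code reduction ranges from about 2.3x to 3.2x, and the Quasar/CUDA runtime ratio ranges from about 0.72 (tex4 (3)) to about 1.42 (tex4 (4)).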

Comments:

2. A more complex test-case

In a second test-case, an experienced independent researcher at a different university implemented an MRI reconstruction algorithm (parallel MRI reconstruction for spiral grid trajectories) in CUDA in a period of three months. Simultaneously, a researcher of the UGent/IPI research groups 1) learned how to use Quasar from the ground up (he had not used Quasar before) and 2) implemented exactly the same algorithm in Quasar. This was achieved in a period of 10 work days! Below are some computation time results obtained for different data set sizes:

| MRI Image size | k-space samples | CUDA developer | Quasar developer |
| --- | --- | --- | --- |
| 128x128 | 32x128 | 2.0 ms | 1.9 ms |
| 256x256 | 32x256 | 2.0 ms | 2.4 ms |
| 256x256 | 64x256 | 3.0 ms | 2.8 ms |
| 256x256 | 128x256 | 4.0 ms | 3.6 ms |

In this section, we list a number of results that we have already obtained using Quasar. The results cover image/video processing, computer graphics and biomedical processing, and demonstrate algorithms that several researchers in our group have successfully implemented in Quasar.

| 3D tracking of pedestrians from a vehicle |

| Simultaneous localization and mapping using LiDAR data, implemented in Quasar |

| Real-time superpixel segmentation of video. Aimed at automotive applications, each captured frame is analyzed in 2D using a superpixel segmentation algorithm. The idea behind the superpixels is to reduce the dimensions of the input video, by segmenting each frame according to a regular grid (but with variable shapes). Each superpixel can have several attributes assigned (such as the average RGB intensity), that are processed by subsequent processing steps. Because the dimensions are reduced, these processing steps are computationally less complex. Here, the superpixel segmentation algorithm was implemented in Quasar and the segmentation results are shown. |

| View interpolation demo: based on two images with corresponding depth maps, taken from different camera views, the images of intermediate views (of a "virtual" camera) are generated. This procedure requires image rectification and warping. Furthermore, to deal with object occlusions, inpainting techniques are employed. Despite the complex processing with several passes over the image, only about 500 lines of Quasar code were required and the code runs at 30 ms/frame on a GeForce GTX 780M GPU for full HD images. |

| Real-time optical flow using a webcam. Optical flow deals with the estimation of motion vectors on a pixel-by-pixel basis. Although optical flow techniques are generally computationally quite demanding, often requiring 5-10 or more iterations per frame, with Quasar we could easily obtain an implementation that works in real time. |

| Real-time depth from stereo. This demo is similar to the view interpolation demo above, with the difference that 1) the RGB images are captured using a webcam and 2) the depth maps are recalculated every second using stereo vision techniques (more specifically, disparity estimation). |

| Demonstration of a simple volumetric raytracer, developed in Quasar. The raytracer requires about 40-50 lines of Quasar code, and automatically uses the hardware texturing units of the GPU to accelerate the memory access to a 512x512x512 cube. The rendering works flawlessly in real time on GeForce 560 Ti and newer GPUs. |

| Demonstration of a volumetric raytracer of a Menger sponge (a 3D fractal). The demonstration simulates a camera entering the Menger sponge structure, which refines infinitely as the camera comes closer. |

| Cell tracking of healing wounds: in the first phase of the video, some cells of interest are selected. When the user is ready, the video starts and the algorithm starts tracking the cells. |

| Ultrasound segmentation. Here, different areas in an ultrasound video are segmented using an active contour technique with regularization. Although the technique is computationally demanding (e.g. several seconds/frame in MATLAB), the processing runs in real time with Quasar. |
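The dimension reduction mentioned in the superpixel demo above can be illustrated with a small sketch. It is written in plain Python rather than Quasar, and it makes a strong simplifying assumption: the cells are fixed squares on a regular grid, whereas the demo uses superpixels with variable shapes. The function name `grid_superpixel_means` is hypothetical. The sketch only shows how a frame collapses into one attribute (the average RGB intensity) per cell, so that later steps have far fewer elements to process.

```python
def grid_superpixel_means(frame, cell):
    """Average RGB per square cell of a regular grid.

    frame: list of rows, each row a list of (r, g, b) tuples.
    cell:  side length of a grid cell in pixels (assumed fixed and square here;
           real superpixels adapt their shape to the image content).
    """
    height, width = len(frame), len(frame[0])
    means = []
    for top in range(0, height, cell):
        row_means = []
        for left in range(0, width, cell):
            # Collect the pixels of this cell, clipping at the frame border.
            pixels = [
                frame[y][x]
                for y in range(top, min(top + cell, height))
                for x in range(left, min(left + cell, width))
            ]
            count = len(pixels)
            row_means.append(
                tuple(sum(p[c] for p in pixels) / count for c in range(3))
            )
        means.append(row_means)
    return means


# 4x4 test frame: left half red, right half blue.
frame = [[(255, 0, 0)] * 2 + [(0, 0, 255)] * 2 for _ in range(4)]
features = grid_superpixel_means(frame, cell=2)

print(len(features), len(features[0]))  # 2 2
print(features[0][0], features[0][1])   # (255.0, 0.0, 0.0) (0.0, 0.0, 255.0)
```

In this toy case, 16 pixels are reduced to 4 cells, each carrying a single averaged colour.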

(C) 2017 Ghent University / imec / Gepura. Patented technology under WO patent 2015150342.