Much like drawing a person's portrait, estimating the 3D structure of an object is much harder when the object does not sit still. This is the topic of my thesis: the 3D structure can be estimated by constraining the object's movements to a linear embedding within the much higher-dimensional space of all plausible point clouds. By retaining only a limited number of past reconstructions and measurements, processing and reconstruction can be performed on-line.

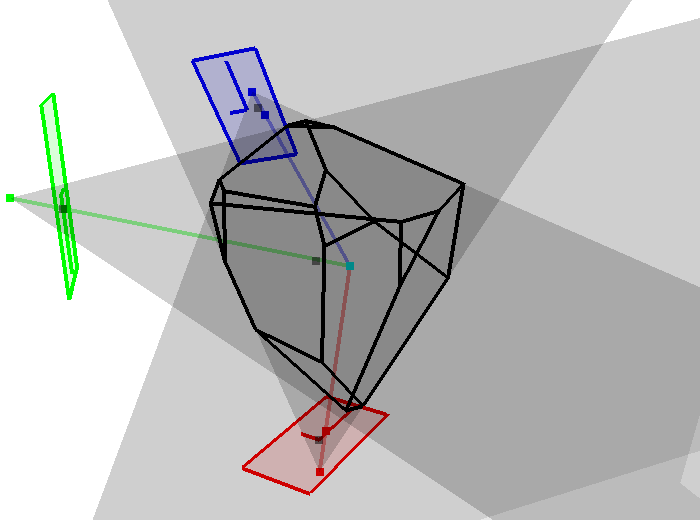

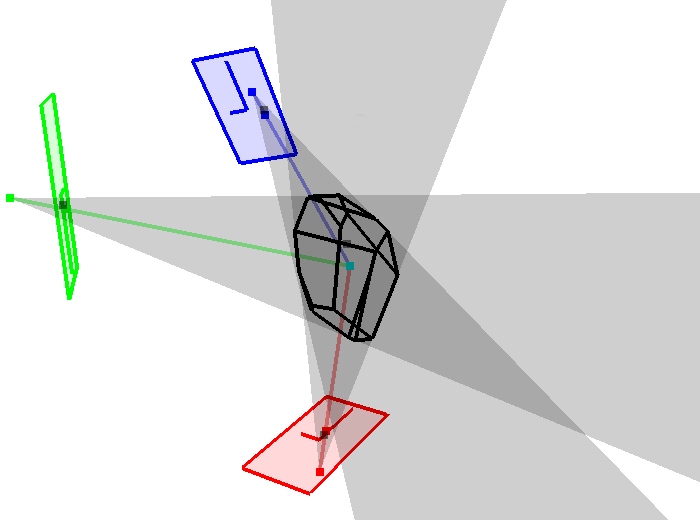

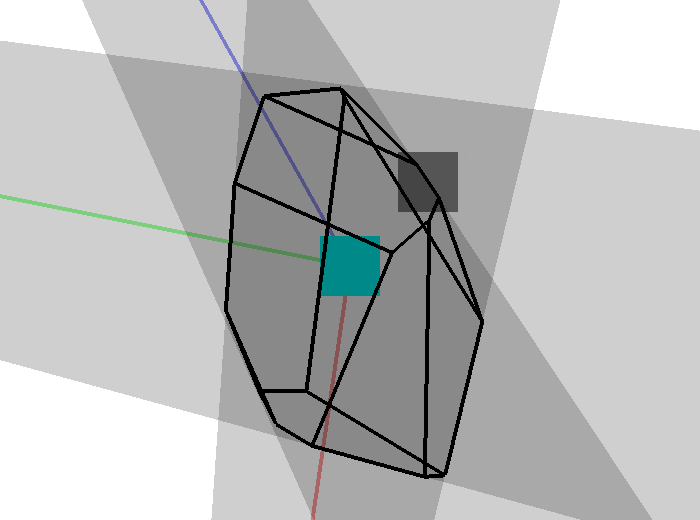

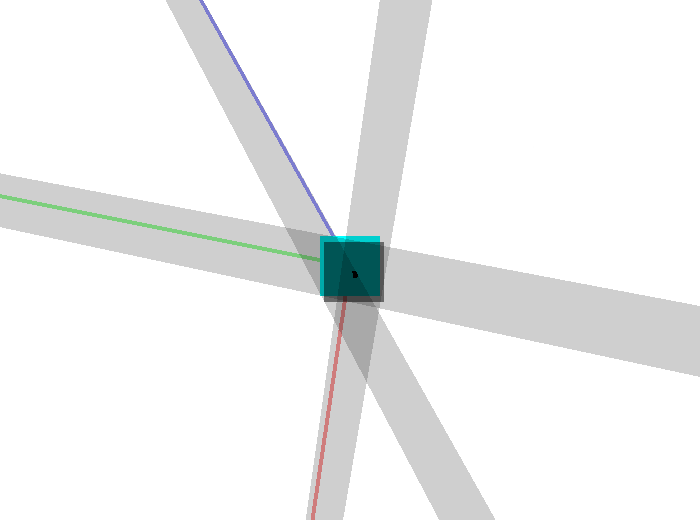
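The two ingredients named above, a linear shape model and a bounded memory of past frames, can be sketched as follows. This is a minimal illustration of the general idea, not the algorithm from the thesis: it assumes the deforming point cloud is a mean shape plus a weighted sum of K basis deformations, and the names `shape_from_basis` and `SlidingWindow` are hypothetical. Estimating the basis and the per-frame coefficients from 2D measurements is not shown.

```python
from collections import deque


def shape_from_basis(mean_shape, basis, coeffs):
    """Point cloud = mean shape + sum_k coeffs[k] * basis[k].

    mean_shape: list of (x, y, z) points; basis: K lists of (dx, dy, dz)
    deformations with the same length; coeffs: K scalar weights for one frame.
    """
    shape = []
    for i, (x, y, z) in enumerate(mean_shape):
        dx = sum(c * b[i][0] for c, b in zip(coeffs, basis))
        dy = sum(c * b[i][1] for c, b in zip(coeffs, basis))
        dz = sum(c * b[i][2] for c, b in zip(coeffs, basis))
        shape.append((x + dx, y + dy, z + dz))
    return shape


class SlidingWindow:
    """Keeps only the most recent frames, so memory and per-frame cost stay bounded."""

    def __init__(self, size):
        self.frames = deque(maxlen=size)  # the oldest frame is dropped automatically

    def push(self, measurement, reconstruction):
        self.frames.append((measurement, reconstruction))

    def __len__(self):
        return len(self.frames)
```

Because every frame's shape is described by only K coefficients, an on-line method only has to update those few numbers (and possibly the basis) from the frames still in the window, rather than re-solving for every point in every past frame.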

More general problems include camera pose estimation, single-point triangulation, ... An interesting avenue of research is the use of cost criteria such as the max-norm (l∞) and the l0 norm: while the max-norm in particular is sensitive to outliers, these criteria can also be used to detect those outliers, and they often lead to fast solutions. An example is shown in the video below. At each iteration, each camera accepts any reconstructed 3D point whose error with respect to its measurement stays below a given maximum. This maximum error is gradually lowered until the cameras agree on only a single point. Over time, the set of points that all cameras accept as plausible reconstructions is a collapsing polyhedron in 3D space.

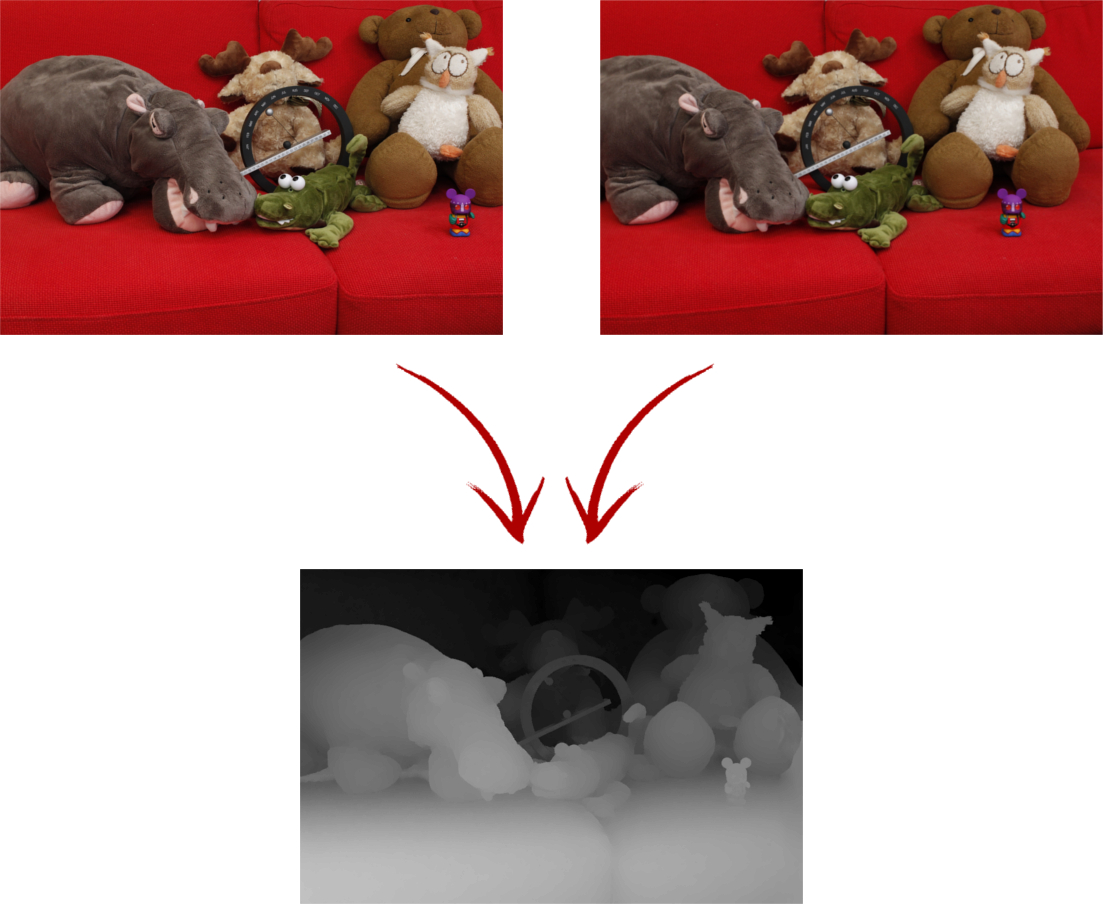
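The shrinking of the maximum error can be illustrated with a one-dimensional toy analogue. Here each "camera" observes a scalar measurement m_i and accepts any estimate x with |x - m_i| ≤ γ; the accepted set is then an interval (a 1D polyhedron) that collapses to a single point as γ is lowered by bisection. This is a sketch under those simplifying assumptions only: in real multi-view triangulation the feasibility test for a fixed γ is a convex program (a linear or second-order cone program) rather than an interval intersection, and the function names are hypothetical.

```python
def feasible_interval(measurements, gamma):
    """Points x accepted by every measurement (|x - m| <= gamma), or None if empty."""
    lo = max(m - gamma for m in measurements)
    hi = min(m + gamma for m in measurements)
    return (lo, hi) if lo <= hi else None


def linf_estimate(measurements, tol=1e-9):
    """Bisection on the maximum error gamma until the feasible set collapses."""
    gamma_lo = 0.0
    # With gamma equal to the spread of the data, the feasible set is never empty.
    gamma_hi = max(measurements) - min(measurements)
    while gamma_hi - gamma_lo > tol:
        gamma = 0.5 * (gamma_lo + gamma_hi)
        if feasible_interval(measurements, gamma) is None:
            gamma_lo = gamma  # no common point: the error bound is too tight
        else:
            gamma_hi = gamma  # still feasible: try a tighter bound
    lo, hi = feasible_interval(measurements, gamma_hi)
    return 0.5 * (lo + hi), gamma_hi
```

For the measurements `[1.0, 2.0, 7.0]`, this converges to x ≈ 4 with a maximum error γ ≈ 3. The measurements that attain that maximum error (1.0 and 7.0) are the ones a max-norm outlier-detection step would examine first, which is why the same machinery that is sensitive to outliers can also help to find them.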

In several settings (think of robotics, obstacle detection, view interpolation) we need to estimate 3D information from the scene, and we need to do it fast. By observing the same scene with two cameras separated by a short baseline, 3D information can be estimated. While this is generally slower than active measurements such as LIDAR or Time-of-Flight cameras, the resulting depth maps are much denser, and we can apply prior knowledge, such as the fact that depth contains little high-frequency content apart from edges. Unlike structure-from-motion techniques (which work on sparse image features), these methods estimate depth for every single pixel in the image!

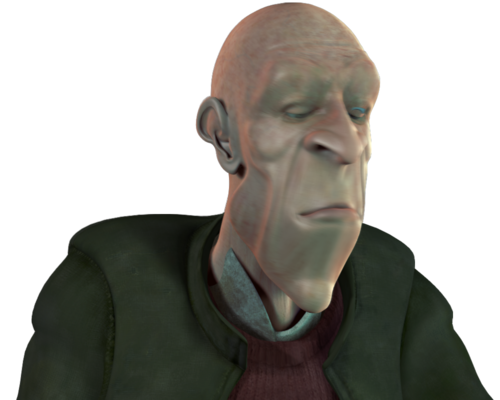

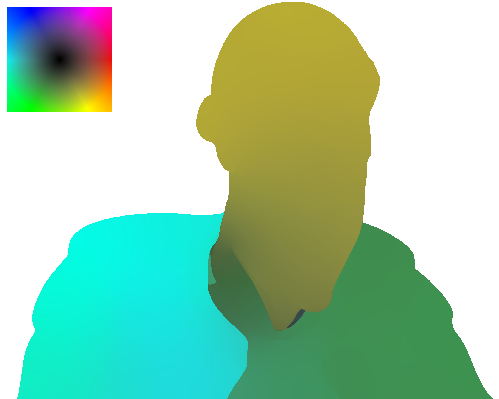
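The core geometric relation is that, for a rectified stereo pair, a point at column x in the left image appears at column x - d in the right image, and its depth is Z = f·B/d, with f the focal length in pixels, B the baseline and d the disparity. The sketch below matches one scanline with a sum-of-absolute-differences (SAD) cost and a winner-takes-all choice per pixel. It is a minimal illustration, assuming already rectified images; it deliberately omits the smoothness prior mentioned above, which dense methods add on top of such a matching cost, and the function names are hypothetical.

```python
def disparity_scanline(left, right, max_disp, half_win=1):
    """Winner-takes-all SAD block matching along one rectified scanline."""
    n = len(left)
    disp = [0] * n
    for x in range(n):
        best_cost, best_d = float("inf"), 0
        for d in range(max_disp + 1):
            cost = 0.0
            for k in range(-half_win, half_win + 1):
                xl = min(max(x + k, 0), n - 1)      # clamp window to the image
                xr = min(max(x + k - d, 0), n - 1)  # candidate match shifted by d
                cost += abs(left[xl] - right[xr])
            if cost < best_cost:
                best_cost, best_d = cost, d
        disp[x] = best_d
    return disp


def depth_from_disparity(d, focal_px, baseline_m):
    """Z = f * B / d; zero disparity corresponds to a point at infinity."""
    return float("inf") if d == 0 else focal_px * baseline_m / d
```

For example, with a focal length of 700 pixels and a 0.1 m baseline, a disparity of 2 pixels corresponds to a depth of 35 m. Because depth is inversely proportional to disparity, small matching errors on distant points translate into large depth errors.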

Tracking an object over time is still often a hard task for a computer. One option is to use optical flow, which finds correspondences between two image frames: which pixel moves where? In general, optical flow techniques compare local neighbourhoods and exploit global constraints (such as total variation regularisation) to estimate these correspondences.

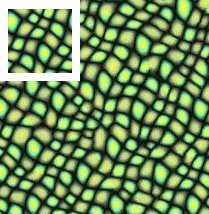
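One widely used way to combine a local matching term with total variation regularisation is the TV-L1 energy below, given here as an illustration of the principle rather than as the specific method behind the result above. For two frames $I_0$ and $I_1$ on the image domain $\Omega$, the flow field $\mathbf{u} = (u_1, u_2)$ is chosen to minimise

$$
E(\mathbf{u}) = \int_\Omega \lambda \, \bigl| I_1(\mathbf{x} + \mathbf{u}(\mathbf{x})) - I_0(\mathbf{x}) \bigr| + \bigl|\nabla u_1(\mathbf{x})\bigr| + \bigl|\nabla u_2(\mathbf{x})\bigr| \, d\mathbf{x}
$$

The first term asks each pixel to keep its brightness after moving; patch-based variants replace this pointwise difference with a comparison of local neighbourhoods. The total variation terms favour piecewise-constant flow while still allowing sharp jumps at motion boundaries, and the weight $\lambda$ balances the two.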

As image resolutions continue to increase, existing algorithms may cope badly with the growing amount of input data. Super-pixels form a segmentation of the image at a variable granularity. By clustering the 2D image points (assigning them to clusters called super-pixels) based on both their colour and their location, for example with the localised k-means clustering used by SLIC, the result is a semi-regular grid of super-pixels on which existing techniques can work with little tweaking. The super-pixels adhere well to image edges (as long as there is sufficient contrast across those edges), which is an important property for many applications. Recent variants such as gSLIC are much more parallelisable and enable the use of super-pixels in time-critical applications by performing the clustering on a GPU.
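The clustering step can be sketched as a simplified SLIC-style iteration: cluster centres start on a regular grid with spacing S, each centre only competes for pixels within a 2S × 2S window, and the distance combines the colour difference d_c with the spatial distance d_s as D = sqrt(d_c² + (m·d_s/S)²), where the compactness m trades off edge adherence against regularity. This is a minimal sketch under stated assumptions: it works on a grayscale image stored as a list of rows (real implementations use a perceptual colour space such as CIELAB), it skips SLIC's connectivity post-processing, and the function name `slic_gray` is hypothetical. It is not the gSLIC implementation.

```python
import math


def slic_gray(img, step, compactness=10.0, iters=5):
    """Simplified SLIC-style superpixels on a grayscale image (list of rows)."""
    h, w = len(img), len(img[0])
    # Initialise cluster centres [intensity, row, col] on a regular grid.
    centres = [[img[r][c], float(r), float(c)]
               for r in range(step // 2, h, step)
               for c in range(step // 2, w, step)]
    labels = [[-1] * w for _ in range(h)]
    for _ in range(iters):
        dist = [[math.inf] * w for _ in range(h)]
        # Assignment: each centre only competes for pixels in its local window.
        for k, (ck, cr, cc) in enumerate(centres):
            r0, r1 = max(int(cr) - step, 0), min(int(cr) + step + 1, h)
            c0, c1 = max(int(cc) - step, 0), min(int(cc) + step + 1, w)
            for r in range(r0, r1):
                for c in range(c0, c1):
                    d_col = img[r][c] - ck
                    d_xy = math.hypot(r - cr, c - cc)
                    d = math.sqrt(d_col ** 2 + (compactness * d_xy / step) ** 2)
                    if d < dist[r][c]:
                        dist[r][c], labels[r][c] = d, k
        # Update: move each centre to the mean intensity and position of its pixels.
        sums = [[0.0, 0.0, 0.0, 0] for _ in centres]
        for r in range(h):
            for c in range(w):
                k = labels[r][c]
                if k >= 0:
                    s = sums[k]
                    s[0] += img[r][c]
                    s[1] += r
                    s[2] += c
                    s[3] += 1
        for k, s in enumerate(sums):
            if s[3]:
                centres[k] = [s[0] / s[3], s[1] / s[3], s[2] / s[3]]
    return labels
```

Restricting each centre to a local window is what makes the method cheap and easy to parallelise: the cost per iteration grows linearly with the number of pixels, and each window can be processed independently, which is the property GPU variants exploit.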

Occasionally, one stumbles on a special type of camera that has not yet received much attention from researchers. One such camera I encountered during my research is the so-called linear pushbroom camera, which crops up from time to time in hyperspectral systems and when working with satellite imagery. Because of the constraints of the hyperspectral robotics system I was working with at the time, I had to formulate a new calibration approach to accurately calibrate the hyperspectral camera and register its data within the full workflow. The code and publication for this approach will be released soon.
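What makes a pushbroom camera unusual is that it images only a single line at a time and builds up the second image dimension by moving. The sketch below shows a simplified projection model to make this concrete; it is not the calibration approach described above. It assumes the sensor moves along the world X axis at constant speed, that its scan line images the plane X = x0 + speed·t (perpendicular to the motion), and that within that plane it behaves as a 1D pinhole camera looking along +Z. All parameter names are hypothetical.

```python
def pushbroom_project(point, x0, speed, focal_px, cy):
    """Project a 3D point with a simplified linear pushbroom camera.

    Assumed geometry (illustrative only): constant-velocity motion along X,
    scan plane perpendicular to the motion, 1D pinhole optics along the sensor.
    Returns (u, v): u is the acquisition time (scan-line index), v the pixel
    position along the sensor line.
    """
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the sensor (Z > 0)")
    u = (X - x0) / speed       # along-track: linear in X, no perspective division
    v = focal_px * Y / Z + cy  # across-track: ordinary perspective projection
    return u, v
```

For instance, `pushbroom_project((5.0, 1.0, 10.0), 0.0, 2.0, 100.0, 50.0)` returns `(2.5, 60.0)`. The mix of a linear mapping along the direction of motion and a perspective mapping across it means the image is not that of a pinhole camera, so standard pinhole calibration procedures do not apply directly.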

Given the power of today's GPUs, general-purpose GPU programming is becoming increasingly important. The framework we use for this is described below. Among other things, I have worked on the efficient simulation of N-body systems. These systems occur in many fields, from molecular dynamics to the behaviour of galaxies. I implemented a parallel algorithm to perform these simulations, which can even make several GPUs work together efficiently! Here's a demo video, too.
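To show why N-body simulation suits GPUs, here is a minimal direct-summation sketch with a kick-drift-kick leapfrog integrator. It is not the multi-GPU algorithm mentioned above: it assumes Newtonian gravity with gravitational constant G = 1 and a small softening length `eps` to avoid singularities at close encounters, and the function names are hypothetical. The key observation is that the acceleration of each body i depends only on the current positions, so the outer loop can be split across GPU threads (one body per thread) or even across several GPUs.

```python
def accelerations(pos, mass, G=1.0, eps=1e-3):
    """Direct O(N^2) summation of softened gravitational accelerations."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):  # independent per body: maps naturally to one GPU thread each
        for j in range(n):
            if i == j:
                continue
            d = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = d[0] ** 2 + d[1] ** 2 + d[2] ** 2 + eps ** 2
            inv_r3 = r2 ** -1.5
            for k in range(3):
                acc[i][k] += G * mass[j] * d[k] * inv_r3
    return acc


def leapfrog_step(pos, vel, mass, dt):
    """One kick-drift-kick leapfrog step; returns the new (pos, vel)."""
    acc = accelerations(pos, mass)
    vel = [[v[k] + 0.5 * dt * a[k] for k in range(3)] for v, a in zip(vel, acc)]
    pos = [[p[k] + dt * v[k] for k in range(3)] for p, v in zip(pos, vel)]
    acc = accelerations(pos, mass)
    vel = [[v[k] + 0.5 * dt * a[k] for k in range(3)] for v, a in zip(vel, acc)]
    return pos, vel
```

Leapfrog is a common choice here because it is time-reversible and keeps the energy error bounded over long runs, at the cost of only one force evaluation per step once consecutive half-kicks are merged.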

I worked on the iMinds/imec BAHAMAS ICON project, in which I was responsible for the entire reconstruction workflow for the PhenoVision robot. Here's a promo video:

Throughout the various research avenues I pursue, the recurring theme is modelling each problem so that it fits into a strong mathematical optimisation framework. While primal-dual optimisation techniques or split Bregman methods are typical for l1/l2-regularised optimisation problems, other approaches may be more applicable or more efficient. In particular, I am working on the use of the l∞ norm in multi-view geometry.
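As an illustration of why the l∞ norm is attractive in multi-view geometry, consider the standard formulation of l∞ triangulation (given here as general background, not as a summary of my own results). Let camera $i$ have projection matrix rows $\mathbf{p}_i^{1\top}, \mathbf{p}_i^{2\top}, \mathbf{p}_i^{3\top}$, let $(u_i, v_i)$ be its measurement and $\tilde{\mathbf{X}}$ the homogeneous 3D point. Minimising the largest reprojection error over all cameras reads

$$
\min_{\mathbf{X}} \; \max_i \; \frac{\left\| \begin{bmatrix} (\mathbf{p}_i^{1} - u_i \mathbf{p}_i^{3})^\top \tilde{\mathbf{X}} \\ (\mathbf{p}_i^{2} - v_i \mathbf{p}_i^{3})^\top \tilde{\mathbf{X}} \end{bmatrix} \right\|}{(\mathbf{p}_i^{3})^\top \tilde{\mathbf{X}}}
\quad \text{subject to} \quad (\mathbf{p}_i^{3})^\top \tilde{\mathbf{X}} > 0 \;\; \forall i .
$$

This objective is not convex, but it is quasiconvex: for a fixed error bound $\gamma$, the set of acceptable points

$$
\left\| \begin{bmatrix} (\mathbf{p}_i^{1} - u_i \mathbf{p}_i^{3})^\top \tilde{\mathbf{X}} \\ (\mathbf{p}_i^{2} - v_i \mathbf{p}_i^{3})^\top \tilde{\mathbf{X}} \end{bmatrix} \right\| \le \gamma \, (\mathbf{p}_i^{3})^\top \tilde{\mathbf{X}} \quad \forall i
$$

is convex (a second-order cone constraint per camera, or linear constraints if the max-norm is also used for each image residual, which gives a polyhedron). The global optimum can therefore be found by bisection on $\gamma$, solving one convex feasibility problem per step.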

Optimisation techniques appear, in one way or another, in every single problem. Every choice ever made follows the same abstract paradigm: which option is the best? While it is not always easy to quantify how good a given choice is, or whether one choice is better than another, we are constantly weighing one possibility against its alternatives.

In Optimisation Techniques, the goal of the course is to familiarise students with the most important optimisation problems and solution techniques. Working with both discrete and continuous variables, students learn how to model problems mathematically, starting from a tangible, practical problem statement. Various techniques for solving the modelled problems are covered, along with a theoretical discussion of the feasibility and optimality of the solutions.

This course consists of lectures, exercise sessions and a mandatory project. It is taught in Dutch.

Additional downloads: here you can find an example of using lp_solve on 32-bit Windows.

The creation and manipulation of images using computers is hardly a novel topic, and yet we keep finding new applications for computer graphics. Image processing is about processing, analysing and classifying natural images stored on a computer. Computer graphics, in contrast, deals with synthetic images: their storage, conversion, manipulation and generation.

Algorithms for texture synthesis, for example, are crucial in all kinds of settings (computer games, movies, virtual reality, ...). Equally important is the modelling of 3D scenes and the creation of 2D observations of those scenes. Transformations of objects in 3D space, camera models and visibility decisions all play an important role in this.

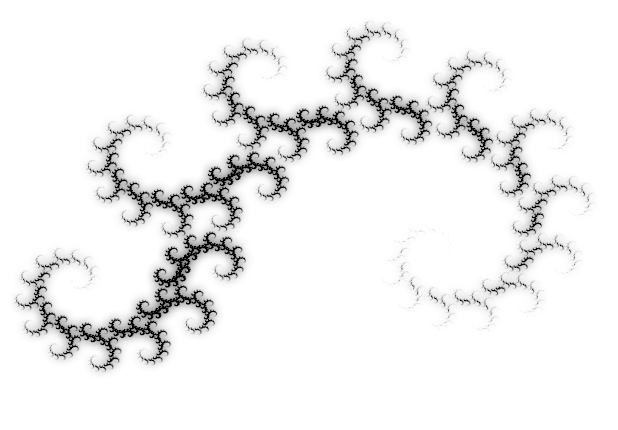
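The three ingredients of rendering a 3D scene into a 2D image can be sketched in a few lines: a rigid transformation of a 3D point, a pinhole camera projection, and a z-buffer that resolves visibility by keeping only the nearest surface per pixel. This is a minimal illustration under simple assumptions (rotation about the Z axis only, camera at the origin looking along +Z, focal length and principal point in pixels, hypothetical function names), not reference code for the course projects.

```python
import math


def rotate_z(p, angle):
    """Rotate point p = (x, y, z) by `angle` radians about the Z axis."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y, s * x + c * y, z)


def translate(p, t):
    """Translate point p by vector t."""
    return tuple(a + b for a, b in zip(p, t))


def project_pinhole(p, f, cx, cy):
    """Pinhole projection; returns pixel (u, v), or None for points behind the camera."""
    x, y, z = p
    if z <= 0:
        return None
    return (f * x / z + cx, f * y / z + cy)


def zbuffer(fragments):
    """Visibility: fragments are (pixel, depth, colour); keep the nearest per pixel."""
    depth, colour = {}, {}
    for px, z, col in fragments:
        if z < depth.get(px, math.inf):
            depth[px], colour[px] = z, col
    return colour
```

For example, `project_pinhole((1.0, 2.0, 4.0), 100.0, 320.0, 240.0)` gives `(345.0, 290.0)`: dividing by depth is what makes distant objects appear smaller, and the z-buffer then decides which of the overlapping surfaces ends up in each pixel.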

This course consists of lectures and a mandatory project. It is taught in English. I assist with the projects on Iterated Function System fractals and texture generation/transfer.

Much of our research is done in an in-house programming language called Quasar. The computational performance of GPUs has improved significantly, with speedups of up to 10x or even 50x compared to single-threaded CPU execution. This makes GPUs especially appealing for machine vision and image processing, where we typically process millions of pixels in parallel. Quasar's role in this is the automatic generation of device-specific code, so that the developer can remain, to a large degree, device-agnostic. The language itself is a high-level scripting language with an easy-to-learn syntax very similar to that of MATLAB. These scripts are compiled into efficient C++, OpenCL, LLVM and/or CUDA code. An automatic scheduler analyses the load of all devices and decides at runtime which device performs which task. In this way, the programmer is relieved of the complicated details that often arise when programming for heterogeneous hardware.

If you are interested in Quasar and its features, please check out the following site and paper:

Quasar - a New Heterogeneous Programming Framework for Image and Video Processing Algorithms on CPU and GPU

B. Goossens, J. De Vylder and W. Philips (ICIP 2014)

PDF

Here is a snippet of Quasar code (this is all you need to write!):

```
function [] = __kernel__ focus_bw(x, y, focus_spot, falloff, radius, pos)
    p = (pos - focus_spot) / max(size(x,0..1))
    weight = exp(-1.0 / (0.05 + radius * (2 * dotprod(p,p)) ^ falloff))
    rgbval = x[pos[0],pos[1],0..2]
    grayval = dotprod(rgbval,[0.3,0.59,0.11])*[1,1,1]
    y[pos[0],pos[1],0..2] = lerp(grayval, rgbval, weight)
end

img_in = imread("flowers.jpg")
img_out = zeros(size(img_in))
parallel_do(size(img_out,0..1),img_in,img_out,[256,128],0.5,10,focus_bw)
imshow(img_out)
```

This reproduces the well-known Focal Black and White effect from Google Picasa.

My office is located in the Technicum building (Sint-Pietersnieuwstraat 41). Take the left entrance (behind the University Forum) and follow the arrows to TELIN. On floor -2.5 (-T), take the skyway to the other building and go up one floor. I can generally be found in the room next to the PC room, lovingly called the Aquarium.

The easiest way to contact me is by e-mail. The landline in our office can be used in case of emergency. Please note: due to recent mishaps, our secretariat has ceased to support IP over avian carriers.